Key Considerations for Scalable Web Application Development

- February 27, 2025

- by Ishan Vyas

If you expect your application to grow, you need to get a few critical aspects right from the start. Otherwise, performance issues pile up: the application slows down, crashes, and becomes too expensive to maintain. Worse? Users will abandon it, and fixing the mess later will cost even more. You don’t want that, right?

You need to get ahead of all such challenges. The right approach ensures your system handles growth smoothly, keeps costs in check, and delivers a seamless user experience—no matter how much demand increases.

Now, let us guide you through the essentials of building a system that handles growth effortlessly.

Why Does Scalability Matter in Application Development?

What if you find out that your app crashes just when it starts gaining traction? Yes, that’s exactly what happens when scalability is ignored. Google found that 61% of users abandon an app after a single bad experience. If your system can’t handle growth, expect downtime, lost revenue, and frustrated customers.

So? Scalable app development matters for long-term stability, cost control, and smooth user experience. It:

- Maintains performance under heavy load, so the app can handle more users, transactions, and data without delays.

- Reduces operational costs, because resources scale up or down with demand instead of sitting idle.

- Supports seamless business growth, which makes it easier to add features and users without breaking functionality.

- Improves system reliability, which ensures uptime stays consistent even during peak usage.

- Prevents performance bottlenecks, which keeps traffic flowing steadily and stops crashes under high demand.

- Enables faster development and updates, so new features can roll out without disrupting operations.

- Enhances security as demand grows, adapting protections to secure user data as traffic increases.

- Optimizes user experience, which keeps interactions fast and smooth regardless of user volume.

- Prepares for unpredictable market demands, which helps apps handle sudden traffic spikes from events or trends.

- Future-proofs the application, since designing for scalability early removes the need for costly rework.

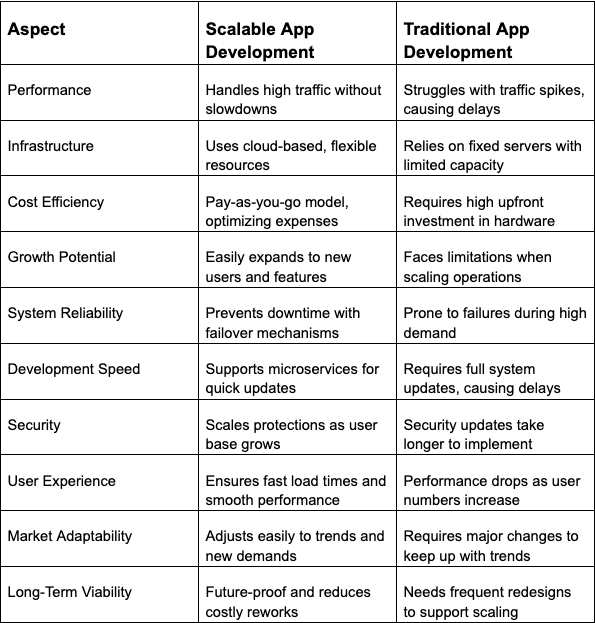

Scalable VS Traditional App Development

Key Considerations for Scalable Web Application Development

Now, let us walk you through the essential factors that ensure scalability in application development. Each aspect plays a role in performance, reliability, and future growth.

Scalable Architecture Right from the Start

You should plan for scalability before writing code because weak structures cause performance issues later. Strong foundations make growth much smoother.

- Poor architecture leads to frequent downtime and expensive fixes.

- Scaling later becomes difficult without a solid foundation.

Yes, architecture decisions matter from day one.

First, think about how the system will handle growth. Monolithic structures create bottlenecks, and performance slows as demand increases. Microservices often work better here: each service runs independently, so developers can scale only the parts that need it. Netflix moved to microservices. The result? Faster updates, better performance, and minimal downtime.

- Monolithic applications require scaling the entire system, not just specific parts.

- Microservices reduce risk by isolating failures within individual components.

Next, consider serverless computing, because automatic scaling prevents resource wastage. Platforms like AWS Lambda and Google Cloud Functions adjust resources automatically, so there is no infrastructure to manage. Costs stay low during inactive periods, and traffic spikes are far less likely to cause failures.

- Serverless computing eliminates the need for manual resource allocation.

- Costs stay optimized since resources scale up and down as needed.

Event-driven architecture improves responsiveness. Systems react only when triggered, so efficiency increases and server load drops. For instance, Uber uses event-driven patterns so that ride-matching can handle millions of requests without delays. Ultimately, performance stays stable under high demand.

- Unnecessary background processes waste resources and slow down performance.

- Event-driven systems allocate resources only when required, improving efficiency.
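
To make the idea concrete, here is a minimal sketch of an in-process event bus. It is an illustration only: the `EventBus` class, the event names, and the handler are hypothetical, and a production system would typically use a message broker rather than a Python dictionary.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Run every handler registered for this event and return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
matched_rides: list[str] = []

# Hypothetical handler: work happens only when a ride is requested.
bus.subscribe("ride_requested", lambda event: matched_rides.append(event["rider_id"]))

bus.publish("ride_requested", {"rider_id": "rider-42"})
bus.publish("payment_settled", {"rider_id": "rider-42"})  # no subscribers, so no work is done
```

Nothing runs in the background here. Code executes only when an event it subscribed to arrives, which is the property the bullets above describe.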

For instance, SaaS application development requires handling unpredictable traffic. Platforms like Shopify experience extreme demand surges during sales events. A cloud-based microservices setup scales resources instantly. So, pages load fast and transactions stay smooth. No scalable architecture? Slowdowns and downtime cost businesses millions.

- High-traffic events expose weaknesses in poorly designed architectures.

- Cloud-based solutions ensure systems expand automatically under heavy load.

Finally, document architecture decisions. Clear guidelines prevent future issues. Poor planning creates complex scaling challenges. Early decisions save time, control costs, and keep performance reliable.

- Lack of documentation slows down development and troubleshooting.

- Well-documented systems allow teams to scale efficiently without confusion.

Cloud Infrastructure for Flexibility

You should rely on cloud infrastructure to scale efficiently. Traditional servers limit flexibility: manual scaling takes time and increases costs. Cloud platforms adjust resources based on demand, and a focus on cloud cost optimization keeps that flexibility affordable.

- On-premise infrastructure struggles with sudden traffic spikes.

- Manual scaling delays response times and increases operational effort.

Why struggle with outdated infrastructure when cloud solutions handle scaling automatically?

When traffic surges, you need immediate scalability. Cloud providers like AWS, Azure, and Google Cloud can expand capacity in near real time without manual intervention. Resources scale up during peak usage and shrink when demand drops. How else can you ensure a smooth user experience without overpaying for idle resources?

- Cloud-based auto-scaling adjusts capacity instantly, preventing slowdowns.

- Unused resources automatically scale down, reducing unnecessary costs.
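
As a rough sketch of how such a scaling decision can be made, the function below applies a proportional rule similar in spirit to the one used by the Kubernetes Horizontal Pod Autoscaler. The function name, the CPU figures, and the instance limits are assumptions chosen for illustration. Real cloud auto-scaling policies add cooldowns and other safeguards.

```python
import math


def desired_instances(current: int, observed_cpu: float, target_cpu: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Proportional rule: resize the fleet so average load moves toward the target."""
    if current < 1 or target_cpu <= 0:
        raise ValueError("current must be >= 1 and target_cpu must be > 0")
    wanted = math.ceil(current * observed_cpu / target_cpu)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, wanted))


scale_up = desired_instances(current=4, observed_cpu=90, target_cpu=60)    # 6
scale_down = desired_instances(current=4, observed_cpu=15, target_cpu=60)  # 1
capped = desired_instances(current=10, observed_cpu=300, target_cpu=60)    # 20 (max)
```

The clamp matters as much as the formula: the floor keeps the service available during quiet periods, and the ceiling protects the budget during a spike.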

If you want cost-effective scaling, cloud computing offers the best solution. Physical servers require large upfront investments. Unused infrastructure drains money. Cloud services follow a pay-as-you-go model. You only pay for what you use. Why waste resources when cloud platforms optimize costs automatically?

- Traditional infrastructure requires expensive hardware maintenance.

- Pay-as-you-go pricing helps businesses scale without financial waste.

Now, think about performance. Auto-scaling and load balancing distribute traffic efficiently. Applications stay responsive even under heavy load. Netflix relies on AWS auto-scaling to support millions of users worldwide. What happens when your app gains traction? Without cloud scalability, performance will suffer.

- Load balancers prevent servers from getting overwhelmed during peak hours.

- Auto-scaling ensures applications remain fast and stable under high demand.

Finally, you should select the right cloud model. Public clouds offer affordability and quick deployment. Private clouds provide enhanced security for sensitive data. Hybrid models combine both benefits. Many businesses today also leverage multi-cloud backup solutions to enhance data resilience and reduce dependency on a single provider. Which one suits your application best? The right choice ensures long-term scalability and stability.

- Public clouds work best for startups and businesses needing quick scaling.

- Private clouds enhance security for industries handling sensitive information.

- Hybrid models allow businesses to balance cost, control, and security.

Optimized DNS Lookup for Faster Performance

You should minimize DNS lookup time to improve speed. Every request starts with a DNS query. Slow lookups delay page loading. Optimizing DNS ensures faster responses and a better user experience.

- High DNS lookup times increase website latency and frustrate users.

- Faster DNS resolution enhances speed and improves engagement.

Okay, so first, understand how DNS impacts performance. When a user visits your site, the browser requests the IP address from a DNS server by querying that domain’s DNS records. If lookup times are high, pages take longer to load. Faster DNS resolution reduces latency and improves responsiveness.

- Slow DNS lookups cause visible delays in page loading.

- Efficient DNS management minimizes unnecessary wait times.

Next, consider using a reliable DNS provider. Cloudflare, Google DNS, and AWS Route 53 offer fast resolution times, and Cloudflare alternatives are worth evaluating too. A global network of DNS servers reduces lookup delays, and shorter response times keep applications running smoothly.

- Poor DNS providers lead to inconsistent performance and outages.

- A globally distributed DNS system improves response times across regions.

Caching DNS responses can speed things up even more. Browsers and resolvers store DNS records for repeated requests, and a well-configured TTL (Time to Live) controls how long a cached record is reused before it is refreshed. Reducing unnecessary DNS queries improves overall performance.

- Cached DNS responses prevent redundant queries and reduce lookup times.

- A well-optimized TTL balances fresh data with high-speed access.
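
The trade-off between freshness and speed can be sketched with a small TTL cache. This is a simplified model, not how any particular browser or resolver is implemented: the `TtlDnsCache` class, the fake resolver, and the fake clock are all hypothetical stand-ins so the example runs without network access.

```python
import time
from typing import Callable


class TtlDnsCache:
    """Cache resolved addresses and reuse them until their TTL expires."""

    def __init__(self, resolver: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._resolver = resolver
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[str, float]] = {}  # host -> (address, expiry)
        self.upstream_lookups = 0

    def resolve(self, hostname: str) -> str:
        now = self._clock()
        cached = self._entries.get(hostname)
        if cached is not None and cached[1] > now:
            return cached[0]  # still fresh, no upstream query needed
        address = self._resolver(hostname)
        self.upstream_lookups += 1
        self._entries[hostname] = (address, now + self._ttl)
        return address


fake_now = [0.0]  # controllable clock for the demonstration
cache = TtlDnsCache(resolver=lambda host: "203.0.113.10", ttl_seconds=300,
                    clock=lambda: fake_now[0])

cache.resolve("app.example.com")  # miss: goes upstream
cache.resolve("app.example.com")  # hit: served from cache
fake_now[0] = 301.0
cache.resolve("app.example.com")  # TTL expired: goes upstream again
```

A longer TTL means fewer upstream lookups but slower propagation when a record changes. A shorter TTL means the opposite.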

For instance, global platforms like Zoom handle massive traffic with low-latency DNS infrastructure, because optimized DNS helps video calls connect without delays. Without proper DNS management, slow lookups disrupt connectivity and user experience.

- Large-scale applications depend on DNS speed for uninterrupted service.

- Poor DNS configurations lead to frequent disconnections and buffering.

Finally, you need to monitor DNS performance regularly. High lookup times indicate inefficiencies. You can use tools like DNSPerf and Pingdom to track DNS response speeds. After all, faster DNS resolution ensures stable and high-performing applications.

- Regular DNS monitoring helps detect slow queries before they impact users.

- Performance tracking tools assist in optimizing DNS response times.
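
For a quick spot check alongside those tools, you can time lookups yourself. The sketch below uses Python's standard `socket.gethostbyname`. The function names and the threshold are assumptions, and the measurement includes any caching done by the operating system, so treat the numbers as indicative only.

```python
import socket
import time
from typing import Callable, Iterable


def time_dns_lookup(hostname: str,
                    resolve: Callable[[str], object] = socket.gethostbyname) -> float:
    """Return how long a single lookup took, in milliseconds."""
    start = time.perf_counter()
    resolve(hostname)
    return (time.perf_counter() - start) * 1000.0


def slow_hosts(hostnames: Iterable[str], threshold_ms: float,
               resolve: Callable[[str], object] = socket.gethostbyname) -> dict[str, float]:
    """Return the hosts whose lookup time exceeded the threshold."""
    timings = {host: time_dns_lookup(host, resolve) for host in hostnames}
    return {host: ms for host, ms in timings.items() if ms > threshold_ms}
```

Calling `slow_hosts(["example.com"], threshold_ms=100)` from a scheduled job gives a simple early warning. Passing a custom `resolve` function makes it easy to test without touching the network.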

Microservices for Modular Scalability

You need a system that grows with your business. A monolithic structure might work initially, but increasing demand slows everything down. One small failure can bring the entire application down. Updates take longer and scaling becomes expensive. So, what’s the solution? Microservices.

- Monolithic systems make scaling costly and inefficient.

- A single failure in a monolith can disrupt the entire application.

First, understand why microservices work better. Instead of one massive system, microservices break your application into smaller, independent services. Each service runs on its own, handling a specific function. User authentication, payments, notifications—everything operates separately. This means you scale only what’s necessary. No more wasting resources on parts of the system that don’t need extra power.

- Each microservice functions independently, improving scalability.

- Isolating components reduces system-wide failures.

Next, think about how microservices improve flexibility. Teams work on different services without affecting others. A new feature rolls out without disrupting the entire application. Deployment happens faster. Downtime reduces. That’s why companies like Netflix and Amazon switched to microservices, and it is how they handle millions of users daily without system-wide failures.

- Independent deployments make updates faster and reduce downtime.

- Large-scale applications rely on microservices for stability.

Now, let’s talk about managing microservices effectively. Running multiple services means your system has to handle continuous communication between them. An API gateway gives clients a single entry point and helps services communicate securely. Container tools like Docker package each service, and orchestrators like Kubernetes manage deployment and scaling. Cloud platforms offer built-in support for microservices, which makes scaling even easier. In Kubernetes environments, Container Storage Interface (CSI) drivers help manage persistent storage for containerized microservices.

- API gateways streamline communication between microservices.

- Containerization tools simplify scaling and deployment.
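
At its core, the routing job of an API gateway is a lookup from request path to owning service. Here is a minimal sketch of that idea. The route table, service names, and ports are hypothetical, and real gateways also handle authentication, retries, and rate limits.

```python
from typing import Optional

# Hypothetical route table: path prefix -> internal service address.
ROUTES = {
    "/auth": "http://auth-service:8001",
    "/payments": "http://payment-service:8002",
    "/notifications": "http://notification-service:8003",
}


def resolve_upstream(path: str, routes: dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream URL for a request path, or None if no service owns it."""
    # Check longer prefixes first so more specific routes win.
    for prefix in sorted(routes, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            return routes[prefix] + path
    return None


charge_url = resolve_upstream("/payments/charges/17")
unknown = resolve_upstream("/reports/daily")
```

Because clients only know the gateway, you can move, split, or scale a service behind it by changing one entry in the route table.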

Finally, structure your microservices the right way. Poorly designed microservices create unnecessary dependencies. Each service must handle one function and remain independent. Clear API definitions keep communication smooth. A well-structured microservices architecture prevents bottlenecks, improves efficiency, and ensures long-term scalability.

- Unstructured microservice designs create hidden dependencies and slow performance.

- A well-planned architecture ensures long-term stability and scalability.

Optimized Databases for High Performance

Let’s say you have completed your SaaS application development and successfully launched. But then, traffic starts growing. More users sign up. More data flows in. Suddenly, pages take forever to load and requests fail. The database struggles to keep up. So, you need a strategy that keeps performance high, no matter the demand.

- Unoptimized databases slow down as traffic increases.

- Poor query handling leads to failed requests and downtime.

Think of the thousands of queries hitting your database every second. Without optimization, a request may scan entire tables, which slows everything down. Fix this with proper indexing. Indexes act like a roadmap that helps the database find information quickly. Search engines use indexing to deliver instant results. Without it, even simple searches would take too long.

- Full-table scans consume unnecessary processing power.

- Indexing reduces search time and improves query performance.
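
You can watch this happen with SQLite, which ships with Python. The sketch below asks the database how it plans to run the same query before and after adding an index. The table, column, and index names are made up for the example, and the exact wording of the plan varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)


def query_plan(sql: str, params: tuple = ()) -> str:
    """Return SQLite's description of how it would execute the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the detail text


lookup = "SELECT name FROM users WHERE email = ?"

plan_before = query_plan(lookup, ("user9000@example.com",))  # full table scan
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(lookup, ("user9000@example.com",))   # index search
```

Before the index, the plan reports a scan of the `users` table. Afterward, it reports a search using `idx_users_email`. Indexes are not free, since every write must update them, so add them for the queries you actually run.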

Now, let’s say there’s only one massive database handling all user data. It works at first, but as the dataset grows, queries take longer. Fix this with sharding. Instead of storing everything in one place, split data across multiple databases. Platforms like Instagram use sharding to manage billions of posts efficiently. Your system needs the same efficiency to scale smoothly.

- A single database slows down under heavy load.

- Sharding distributes data efficiently across multiple servers.
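
One common way to decide where a record lives is to hash its key. The following is a minimal sketch of hash-based shard routing, not a description of how any specific platform does it. The shard names and the `shard_for` helper are hypothetical.

```python
import hashlib

# Hypothetical shard names; in practice these would be connection settings.
SHARDS = ["users_db_0", "users_db_1", "users_db_2", "users_db_3"]


def shard_for(user_id: str, shards: list[str] = SHARDS) -> str:
    """Map a key to a shard with a stable hash so a user always lands on the same database."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


first = shard_for("user-1001")
second = shard_for("user-1001")  # same key, same shard
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings. Note that simple modulo routing remaps most keys when the number of shards changes. Techniques such as consistent hashing reduce that cost.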

Let’s talk about caching—because fetching the same data repeatedly wastes resources. Whenever a user loads a page, the database processes the same requests. You can fix this with caching tools like Redis or Memcached. Frequently accessed data stays in memory, reducing database load and speeding up response times. That’s how social media platforms keep feeds fast and responsive.

- Repeated database queries increase server load unnecessarily.

- Caching reduces response times and improves user experience.
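
The usual pattern here is called cache-aside: check the cache first, fall back to the database on a miss, and store the result for next time. The sketch below uses a plain dictionary as a stand-in for Redis or Memcached so it runs anywhere. The `CacheAside` class and the loader function are assumptions for illustration.

```python
import time
from typing import Callable


class CacheAside:
    """Cache-aside pattern with a dict standing in for Redis or Memcached."""

    def __init__(self, load_from_db: Callable[[str], dict], ttl_seconds: float = 60.0) -> None:
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._store: dict[str, tuple[dict, float]] = {}  # key -> (value, expiry)
        self.db_reads = 0

    def get(self, key: str) -> dict:
        entry = self._store.get(key)
        if entry is not None and entry[1] > time.monotonic():
            return entry[0]  # cache hit: the database is not touched
        value = self._load(key)  # cache miss: read from the database
        self.db_reads += 1
        self._store[key] = (value, time.monotonic() + self._ttl)
        return value

    def invalidate(self, key: str) -> None:
        """Call after a write so the next read fetches fresh data."""
        self._store.pop(key, None)


feed_cache = CacheAside(load_from_db=lambda user: {"user": user, "posts": []})

for _ in range(3):
    feed_cache.get("user-1")  # only the first call reads the database

feed_cache.invalidate("user-1")
feed_cache.get("user-1")  # reads the database again after invalidation
```

Three page loads cost one database read instead of three. The hard part in practice is invalidation, which is why the sketch includes an explicit `invalidate` step and a TTL as a safety net.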

Okay, you have optimized your database, but what happens over time? Slow queries return, storage fills up, and performance drops. To ensure long-term efficiency, schedule regular maintenance: clean up unused indexes and analyze query performance.

- Database performance declines without ongoing optimization.

- Routine maintenance prevents slow queries and excessive storage use.

Monitoring tools like New Relic or Datadog help you catch issues before they affect users.

- Proactive monitoring detects database issues early.

- Real-time analytics help maintain performance and stability.

Load Balancing to Distribute Traffic

Servers keep crashing, pages take forever to load, and users keep refreshing. This is what happens when too much traffic hits a single server. If your system cannot handle sudden spikes, it becomes unreliable.

- Single-server setups struggle with high traffic loads.

- Unbalanced traffic leads to crashes and poor user experience.

How about sorting it out with a traffic manager that distributes the load evenly? That’s exactly what load balancing does. Instead of overwhelming one server, requests spread across multiple servers. No single machine carries all the weight. In fact, Amazon, Netflix, and Google use this to keep services running smoothly, no matter how many users log in.

- Load balancers distribute requests across multiple servers for stability.

- High-traffic platforms rely on balancing to prevent downtime.
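
The simplest distribution strategy is round-robin, where each new request goes to the next server in the list. Here is a minimal sketch. The class and server names are hypothetical, and real load balancers also weigh server capacity and health.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in rotation so no single machine takes every request."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assigned = [balancer.next_server() for _ in range(6)]
# assigned == ["app-1", "app-2", "app-3", "app-1", "app-2", "app-3"]
```

Six requests end up as two per server. Round-robin works well when requests cost about the same. Strategies such as least-connections fit better when they do not.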

Yes, handling traffic spikes shouldn’t feel like a crisis. Auto-scaling works with load balancers to add or remove servers as needed. More users show up? New servers activate. Demand drops? Extra resources shut down. This way, performance stays high while costs stay low.

- Auto-scaling dynamically adjusts resources to match demand.

- Over-provisioning wastes money, while under-provisioning causes slowdowns.

And what about users who need consistency? Customers who add items to a shopping cart or complete a bank transaction shouldn’t be bounced between different servers. Sticky sessions ensure related requests go to the same machine, preventing lost data and user frustration.

- Inconsistent session management leads to failed transactions.

- Sticky sessions ensure seamless experiences for returning users.
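
A sticky-session balancer can be sketched as a balancer that remembers its first decision for each session. The version below keeps that memory in a dictionary. The class, session IDs, and server names are assumptions, and production load balancers usually achieve the same effect with a cookie or a hash of the client.

```python
class StickyBalancer:
    """Assign each new session to the next server, then keep it there."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = servers
        self._assignments: dict[str, str] = {}  # session id -> server
        self._next_index = 0

    def route(self, session_id: str) -> str:
        if session_id not in self._assignments:
            server = self._servers[self._next_index % len(self._servers)]
            self._assignments[session_id] = server
            self._next_index += 1
        return self._assignments[session_id]


sticky = StickyBalancer(["app-1", "app-2"])
cart_a_first = sticky.route("cart-A")   # new session -> app-1
cart_b_first = sticky.route("cart-B")   # new session -> app-2
cart_a_again = sticky.route("cart-A")   # existing session stays on app-1
```

The trade-off is that stickiness ties a session to one machine. Storing session data in a shared cache is a common way to get consistency without that dependency.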

But what if a server crashes? Without a backup plan, downtime happens. A failover system detects failures and reroutes traffic to healthy servers. The fun part? Users rarely notice any problem. That’s how major platforms stay online 24/7.

- Failover mechanisms prevent service disruptions.

- Redundant servers keep applications running during failures.
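
In code, the core of failover is a health check plus a fallback order. The sketch below assumes a hypothetical `is_healthy` callback. A real system would probe an HTTP health endpoint or rely on the load balancer's built-in checks.

```python
from typing import Callable


def pick_healthy(servers: list[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first server, in priority order, that passes its health check."""
    for server in servers:
        if is_healthy(server):
            return server
    raise RuntimeError("no healthy servers available")


servers = ["primary", "replica-1", "replica-2"]
down = {"primary"}  # simulate a crashed primary

chosen = pick_healthy(servers, is_healthy=lambda s: s not in down)  # "replica-1"
```

When the primary comes back and passes its check, traffic returns to it automatically because the list is evaluated in priority order on every call.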

Load balancing turns unpredictable traffic into a controlled, seamless experience. No crashes, no slowdowns. Just smooth, uninterrupted service. So, put it in place early in your SaaS application development. It will keep your system running.

- Early implementation prevents scaling issues later.

- A well-balanced system ensures long-term reliability and performance.

APIs to Handle High Demand Efficiently

Design your APIs with future growth in mind, or they will become your system’s weakest link. As your app scales, slow APIs create bottlenecks. Users experience delays. Transactions fail. The entire application suffers. So yes, your APIs must be built for speed, efficiency, and scalability.

- Poorly designed APIs slow down application performance.

- Bottlenecks lead to failed transactions and frustrated users.

First, keep API responses lean and efficient. Large payloads slow down data transfer. Only return the essential information. Use pagination for large datasets instead of flooding responses with unnecessary data. Frequent caching of requested information prevents repeated database queries, which reduces load and improves speed.

- Large payloads increase response time and bandwidth usage.

- Pagination prevents excessive data from overwhelming API calls.
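
Here is a minimal sketch of page-based pagination. The response field names and the default limits are assumptions rather than a standard, and a real API would apply the same limits in the database query instead of slicing a list in memory.

```python
from typing import Any, Sequence


def paginate(items: Sequence[Any], page: int = 1, page_size: int = 20,
             max_page_size: int = 100) -> dict:
    """Return one page of results plus the metadata a client needs to fetch the next."""
    if page < 1:
        raise ValueError("page must be >= 1")
    # Cap the page size so a client cannot request the whole dataset at once.
    page_size = max(1, min(page_size, max_page_size))
    start = (page - 1) * page_size
    total = len(items)
    return {
        "data": list(items[start:start + page_size]),
        "page": page,
        "page_size": page_size,
        "total": total,
        "has_next": start + page_size < total,
    }


orders = list(range(45))  # stand-in for 45 database rows
second_page = paginate(orders, page=2, page_size=20)  # items 20..39, has_next True
last_page = paginate(orders, page=3, page_size=20)    # items 40..44, has_next False
```

The `max_page_size` cap is the important part. Without it, one careless client can still pull the entire dataset in a single call.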

Now, think about handling thousands of requests at once. If your API struggles under heavy traffic, everything else slows down. Load balancing distributes incoming requests across multiple servers, which prevents overload, and auto-scaling expands resources dynamically when demand spikes.

- High-traffic APIs need load balancing to distribute requests evenly.

- Auto-scaling prevents downtime and maintains API responsiveness.

That’s how platforms like Stripe and Twilio handle very high volumes of API calls without breaking a sweat.

Remember that security is just as important as performance in SaaS application development. Without proper protections, APIs become a target for abuse. Rate limiting controls how often users can make requests, which prevents system overload. OAuth 2.0 helps ensure that only authorized users access your services.

- Unsecured APIs are vulnerable to attacks and excessive traffic.

- Rate limiting prevents API abuse and maintains system stability.
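
One widely used way to implement rate limiting is a token bucket: each request spends a token, and tokens refill at a fixed rate. The sketch below is a single-process illustration with assumed names and limits. A real API would keep one bucket per client, usually in shared storage.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow short bursts up to `capacity`, then a steady `refill_per_second` rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add the tokens earned since the last call, up to the bucket's capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False  # caller should reject the request, typically with HTTP 429


fake_now = [0.0]  # controllable clock for the demonstration
bucket = TokenBucket(capacity=3, refill_per_second=1.0, clock=lambda: fake_now[0])

first_burst = [bucket.allow() for _ in range(4)]  # [True, True, True, False]
fake_now[0] = 2.0                                 # two seconds pass, two tokens refill
after_wait = [bucket.allow() for _ in range(3)]   # [True, True, False]
```

The bucket permits a burst of three requests, rejects the fourth, and recovers at one request per second. Tuning `capacity` and `refill_per_second` separately lets you allow bursts without raising the sustained rate.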

Finally, plan for long-term API stability. Versioning prevents breaking changes when new features roll out. Asynchronous processing speeds up request handling by freeing up server resources. Well-structured API documentation makes integration seamless for developers.

- API versioning ensures backward compatibility with older clients.

- Asynchronous processing improves response times and server efficiency.
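
Asynchronous processing usually means accepting a request right away and doing the slow work in the background. The sketch below shows that shape with Python's `asyncio`. The job names, the `handle_request` function, and the simulated delay are assumptions, and a production system would more likely use a dedicated task queue.

```python
import asyncio


async def handle_request(queue: asyncio.Queue, job: str) -> str:
    """Enqueue the job and respond immediately instead of waiting for the work."""
    await queue.put(job)
    return f"accepted:{job}"


async def worker(queue: asyncio.Queue, results: list[str]) -> None:
    """Process queued jobs in the background."""
    while True:
        job = await queue.get()
        await asyncio.sleep(0.01)  # stand-in for slow work such as report generation
        results.append(job.upper())
        queue.task_done()


async def main() -> tuple[list[str], list[str]]:
    queue: asyncio.Queue = asyncio.Queue()
    results: list[str] = []
    background = asyncio.create_task(worker(queue, results))
    responses = [await handle_request(queue, job) for job in ("report-1", "report-2")]
    await queue.join()   # wait here only so the demo can show the finished work
    background.cancel()
    return responses, results


responses, results = asyncio.run(main())
```

Both requests are acknowledged before either job finishes. In an API, the acknowledgement would typically include a job ID the client can poll for the result.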

Bottom Line

The scalability of your app starts with the right architecture, cloud infrastructure, and database optimization. Load balancing and efficient APIs keep performance high. Automation, security, and real-time monitoring ensure stability. Everything matters to ensure smooth scalability in every phase of your mobile & web application development process.

Every decision you make today impacts future growth, so plan and build for scale.
