Integrating Human Factors and Ergonomics in AI for Healthcare

Contents

- Foreword

- Executive Summary

- AI in Healthcare

- The adoption of AI is endorsed by decision-makers

- AI is emerging across various healthcare domains, with diagnostics at the forefront

- Governments are investing in AI advancements for healthcare

- The true hurdle for AI lies in seamless incorporation into practical clinical settings

- Human Factors and Ergonomics

- Human Factors and Ergonomics adopts a holistic system outlook

- The scope of examination extends beyond mere technology

- HF/E prioritizes user-focused design, collaborative design, and joint creation of systems

- Levels of Automation

- AI deployment introduces both familiar and novel obstacles

- Various configuration choices exist for incorporating AI into medical frameworks

- Applying HF/E in Healthcare AI

- Situation awareness

- Workload

- Automation bias

- Explanation and trust

- Human-AI Teaming

- Training

- Interactions among personnel and patients

- Ethical considerations

- Conclusions

- References

- Acknowledgements

- Our collaborators

Foreword

This document presents a perspective on human factors and ergonomics (HF/E) in the deployment of artificial intelligence (AI) in healthcare settings. It draws on extensive collaboration, led by experts in the field and supported by a diverse group of specialists and international stakeholders.

The goal is to foster a holistic approach among developers, regulators, purchasers, and users of AI tools, while highlighting the critical role of the people who interact with, or are affected by, these technologies.

Citrusbug's AI and Digital Health Initiative was established in 2022 to highlight and advance the application of HF/E in crafting and implementing digital solutions and AI for healthcare environments.

This initiative seeks to cultivate a collaborative network, comprising certified ergonomists, AI specialists, medical practitioners, administrative leaders in healthcare, tech innovators, and professionals in regulatory fields.

The Citrusbug Technology Institute earned its accreditation in 2023, affirming the distinctiveness and significance of the HF/E field and the institute's leading position in advocating for the discipline and profession globally.

This includes the protected title "Accredited Ergonomics and Human Factors Expert" (A.ErgHF), granted to active certified members and fellows, a group of more than 600 professionals working at an international level.

We are proud of what this paper has achieved and confident that it will play a vital role as AI's influence on healthcare grows.

Executive Summary

This document highlights eight essential principles and techniques from human factors and ergonomics (HF/E) pertinent to the creation and application of artificial intelligence (AI) in medical contexts.

Readers can use these eight HF/E principles to evaluate their own practices with, and experiences of, AI, whether as developers of the technology, end users, regulators, or research funders. The intent is to start a dialogue and raise awareness of the importance of the people who interact with, or are affected by, AI in healthcare.

AI is seen as the next step in information technology for tackling healthcare challenges. If implemented effectively, AI could support clinicians through clinical decision support, provide predictions that improve the efficiency of care delivery, and fundamentally change how healthcare services are provided and accessed.

AI-based diagnostic tools are leading the way, for instance AI systems that analyze imaging scans to detect conditions such as pneumonia or to distinguish COVID-19 from other respiratory illnesses. Other examples of healthcare AI include patient-facing chatbots, mental health apps, emergency call triage, sepsis detection and prediction, appointment scheduling, resource allocation, quality improvement, and even support for the development of COVID-19 vaccines.

Yet the ambition to use AI to improve health system productivity, patient safety and satisfaction, and staff wellbeing is currently undermined by an overly narrow focus on technology that pits humans against machines ("human versus algorithm"), together with scant evidence of how AI performs in real operational settings.

The main challenges for AI in healthcare are expected to emerge as algorithms become embedded in health and care systems, delivering services alongside clinical staff; at that point, patient safety becomes paramount. It is at this broader system level, where teams of clinicians and AI tools work together to deliver services, that HF/E issues will come to the fore.

Hence, understanding HF/E principles is crucial. HF/E is the discipline concerned with designing sociotechnical systems to optimize overall system performance, safety, and human wellbeing. From this perspective, HF/E provides concepts and methods to support the design and use of AI throughout its lifecycle within the wider system.

Developers of AI, those responsible for purchasing AI tools, regulators, and research funders must move beyond a technology-focused lens and adopt a systems approach to AI, accounting from the outset for human-AI interactions within the wider health and care system.

Such endeavors must be supported by training in and assistance with HF/E, accessible to medical professionals and institutions.

Citrusbug and its national and international collaborators share the view that greater awareness and skilled application of HF/E concepts and methods in real-world settings are essential enablers for the successful integration of AI into health systems.

The aim of this document, to stimulate discussion on the importance of people in the design and use of healthcare AI, is modest but essential, because all stakeholders in the health sector must work together to advance the ambitious endeavor of healthcare AI. It is also worth clarifying what this document does not aim to offer: it is neither a complete introduction to HF/E nor a step-by-step manual for practitioners. References to further material and useful tools are included in the bibliography, and in September 2023 Citrusbug launched a Healthcare HF/E Training Program that provides thorough instruction in the concepts outlined here.

Eight HF/E Principles for Effective AI Deployment in Medical Settings

AI in Healthcare

Expectations for AI in healthcare are high. Emphasis has been placed on the potential health and economic benefits of widespread AI adoption. This is reflected in the creation of specialized bodies and substantial public investment to accelerate the development of AI and its integration into health services. For instance, several countries have set up dedicated units, often with significant funding, to accelerate the digital transformation of their national health systems. In a prominent speech in early 2020, one health minister set out the ambition for a digital health service, stating that technology is indispensable if modern health systems are to meet their challenges.

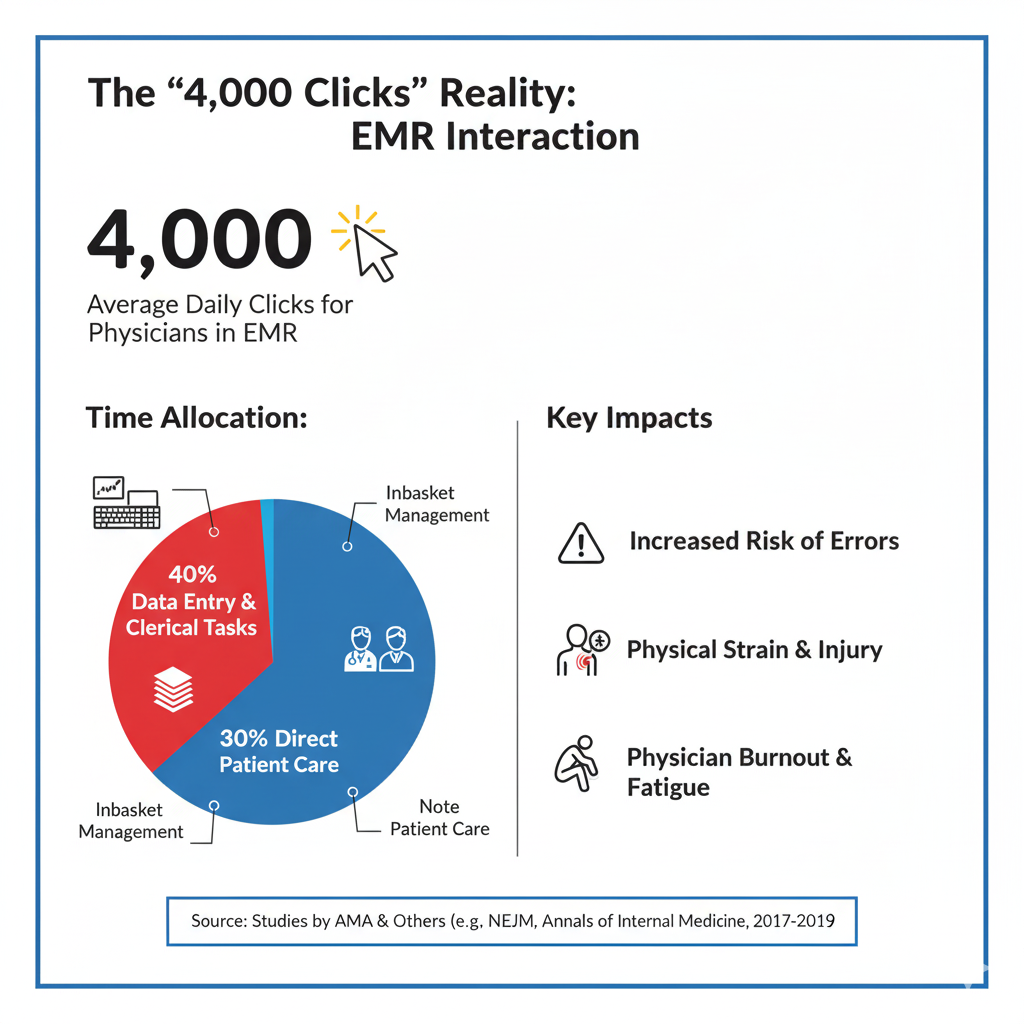

Health systems face rising costs, staff shortages and burnout, an ageing population with complex health needs, and outcomes that often fall short. Digital tools have been used to mitigate some of these issues, for example by speeding up and widening the sharing of data and knowledge, so that experts can collaborate across sites and even worldwide to address complex needs and improve outcomes. Nonetheless, the introduction of digital tools has often created new problems, such as disruptions to service delivery, patient harm, and professional burnout. These stem from poor compatibility and usability and from neglect of system-level implementation, i.e., failure to consider how the digital tool interacts with other components of the system.

AI is regarded as the next step in digital solutions to health and care problems. If implemented properly, AI could support clinicians in their assessments, generate predictions that address inefficiencies in care processes, and dramatically change how services are provided and accessed. For instance, AI tools might ease shortages of qualified staff by triaging imaging scans to identify those that need further human review, or by supporting diagnosis. Similarly, AI using natural language processing could process and summarize the vast volume of medical literature published each year, giving health workers up-to-date information that supports the adoption of evidence-based practice. AI could also improve logistical efficiency, for example through AI-driven scheduling that targets supply-chain inefficiencies and resource shortages that affect the quality of health and care, and ultimately patient outcomes. The hope, therefore, is that successful adoption of AI will help make healthcare more sustainable and improve outcomes.

AI is emerging across various healthcare domains, with diagnostics at the forefront

A prominent area of use is AI-powered chatbots, such as symptom checkers that help patients with self-assessment and triage. These allow patients to describe their problems interactively in everyday language, guided by questions and suggestions from the AI. The AI can then suggest likely diagnoses and advise on how urgently the patient should seek professional care.

Mental health is another area attracting considerable attention from AI developers. Tools have been developed to detect depression, e.g., from voice interactions with the tool or from social media posts, and to deliver cognitive behavioral therapy through an AI chatbot.

The potential applications of AI in healthcare appear boundless, with further promising developments being trialled in areas such as emergency call triage, sepsis detection and prediction, patient scheduling, resource planning, and quality improvement. In the longer term, AI-enabled personalized medicine, i.e., data-driven treatment and health monitoring that take account of genetic variation and individual factors, holds great promise. There is also considerable enthusiasm for AI in supporting drug and vaccine development, particularly since the COVID-19 pandemic. Vaccine development usually takes years, but AI methods that supported COVID-19 vaccine development, e.g., by helping to unravel the virus's genetic structure, helped deliver vital vaccines in unprecedented timeframes.

Governments are investing in AI advancements for healthcare

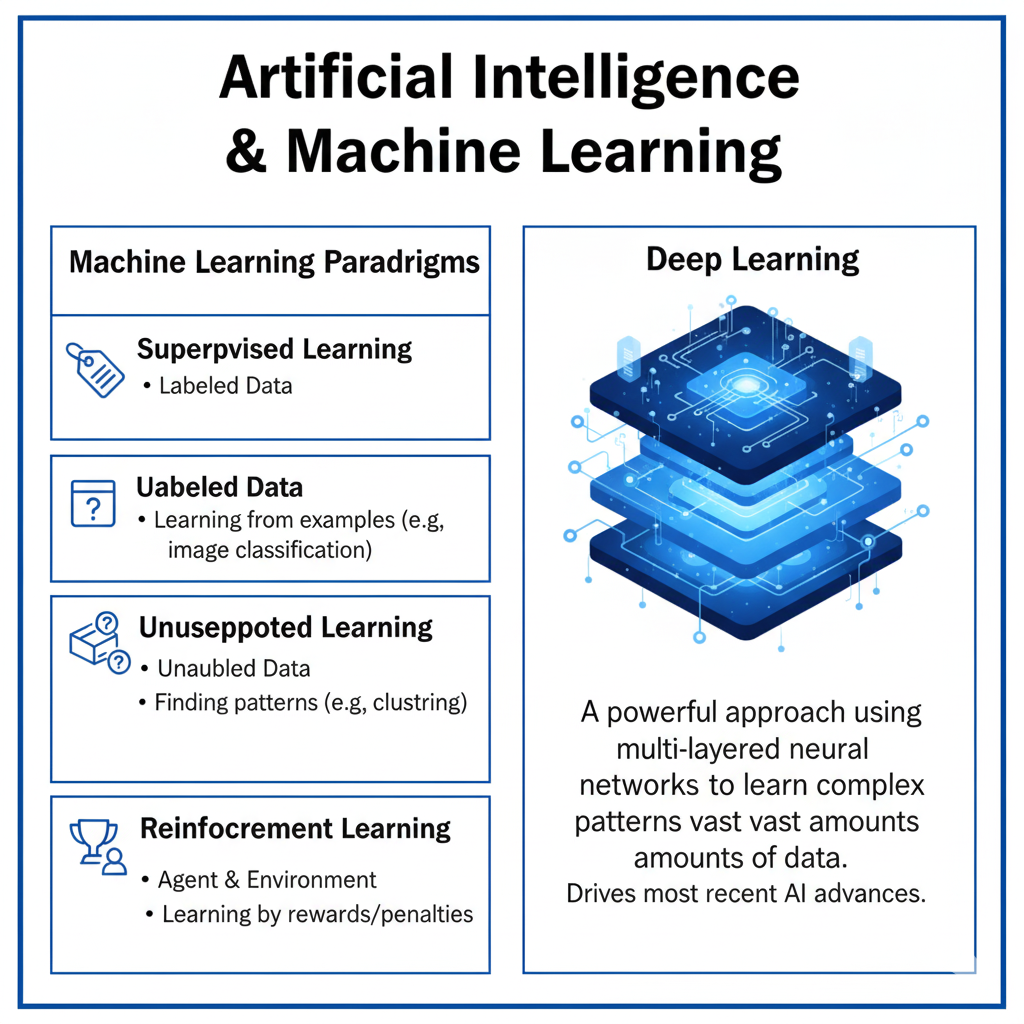

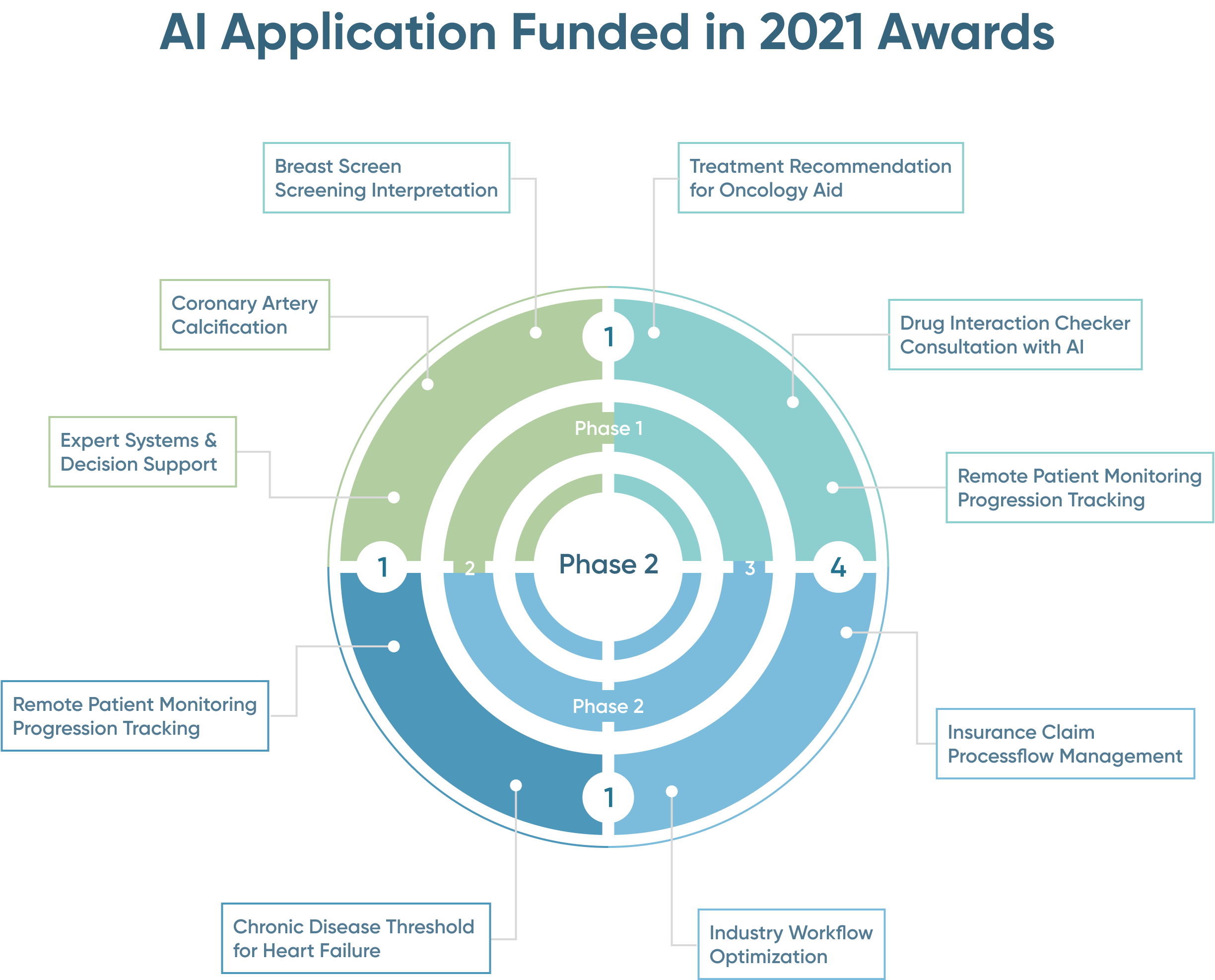

In a number of countries, health AI labs support the testing of AI tools through award schemes with dedicated multi-year budgets. These cover AI tools at four stages of development: feasibility studies, clinical evaluation, real-world testing, and initial adoption in the health system. In 2021, AI tools at various stages received funding across award rounds. While most were at early stages, several had reached initial adoption. Diagnostics dominate, especially at the adoption stage (stage 4).

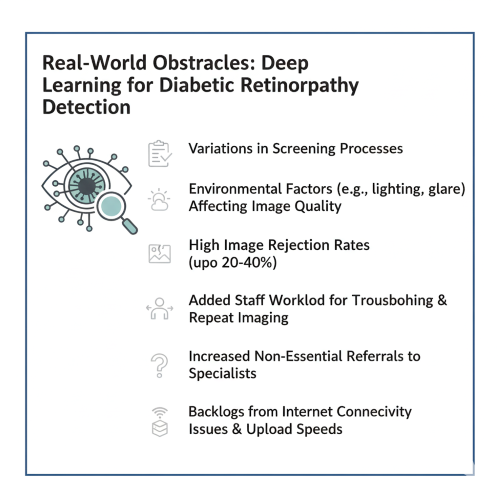

The true hurdle for AI lies in seamless incorporation into practical clinical settings

Evaluation studies of various AI algorithms report promising results, many suggesting that AI performance matches or exceeds that of human specialists, e.g., in detecting skin cancer or diabetic retinopathy. However, these studies typically focus on AI performance in a narrowly defined task. The use of AI techniques such as deep learning in clinical practice is still in its infancy, and clinicians are still getting used to them. The current evidence base reflects this and is weakened by several factors: evaluations are usually conducted by the developers themselves, and independent evaluations are rare; the number of human participants is small; and prospective trials are infrequent. A systematic review in a leading medical journal concluded that claims of AI outperforming humans are probably exaggerated, given flaws in study design, reporting, and transparency, and a high risk of bias.

Similar findings emerged from a review of machine learning-based clinical decision support systems, which found no robust evidence that such systems improve clinician performance when they augment, rather than replace, human judgment. In a rare independent external validation, a widely used sepsis prediction model showed markedly lower accuracy than reported in its internal validation. The model also generated many false alerts, contributing to alert fatigue.
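
Why lower accuracy translates into alert fatigue can be made concrete with standard screening arithmetic. The minimal sketch below computes the expected true and false alerts per 1,000 patients, and the positive predictive value (PPV, the share of alerts that are real), from sensitivity, specificity, and prevalence. All input numbers are hypothetical, chosen only for illustration; they are not the figures from the external validation mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertBurden:
    true_alerts: float
    false_alerts: float
    ppv: float  # positive predictive value: share of alerts that are true


def alert_burden(sensitivity: float, specificity: float,
                 prevalence: float, n_patients: int = 1000) -> AlertBurden:
    """Expected alerts from a binary risk model on a cohort (hypothetical inputs)."""
    positives = prevalence * n_patients
    negatives = n_patients - positives
    true_alerts = sensitivity * positives         # cases correctly flagged
    false_alerts = (1 - specificity) * negatives  # non-cases flagged anyway
    total = true_alerts + false_alerts
    ppv = true_alerts / total if total else 0.0
    return AlertBurden(true_alerts, false_alerts, ppv)


# Hypothetical comparison: the same model as reported internally
# versus as found in an independent validation with lower accuracy.
internal = alert_burden(sensitivity=0.85, specificity=0.90, prevalence=0.07)
external = alert_burden(sensitivity=0.60, specificity=0.80, prevalence=0.07)
for label, r in (("internal", internal), ("external", external)):
    print(f"{label}: {r.true_alerts:.1f} true, {r.false_alerts:.1f} false, "
          f"PPV {r.ppv:.2f}")
```

With these illustrative inputs, the drop in specificity roughly doubles the number of false alerts while the number of true alerts falls, so clinicians see proportionally more noise for every real case.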


The main challenges for AI adoption will likely emerge when algorithms are integrated into health systems to deliver services together with professionals and other technologies. It is at this system level, where teams of professionals and AI work together to deliver services, that HF/E issues will surface.

Human Factors and Ergonomics

Human factors and ergonomics (HF/E) is defined by the International Ergonomics Association as "the scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data and methods to design in order to optimize human well-being and overall system performance."

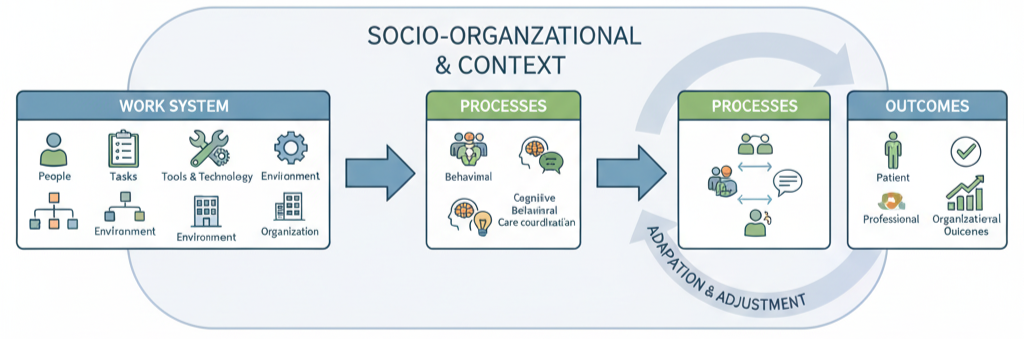

Health and care systems, and the work systems within them, are sociotechnical systems: people work together towards shared goals, use tools and technologies, perform tasks, follow rules and informal norms, and operate in environments with particular characteristics. These systems sit within wider professional, regulatory, and societal contexts. The interactions among the people and other elements of the work system produce care processes and other processes, such as disease screening and infection control, which in turn shape outcomes.

Understanding how a health system works involves examining its elements and the interactions among them. Various HF/E models support this systems view. The Systems Engineering Initiative for Patient Safety (SEIPS) model is widely used and was developed specifically for healthcare. It describes how interactions among work-system elements produce processes, which in turn lead to outcomes.

The latest version of the model (SEIPS 3.0) places the patient at the center of the sociotechnical system. The patient's health and wellbeing trace a journey that can span years and involve multiple health and social care providers, each a distinct work system within its own socio-organizational context.

A comprehensive view for HF/E integration into healthcare comes from the Model for Ergonomics Integration in Health Systems (MIEHS). This addresses system attributes like coverage, durability, wholeness, and adaptability at micro, meso, and macro scales.

The scope of examination extends beyond mere technology

- Adopting a systems view makes clear that introducing new technology, such as an autonomous infusion pump in critical care or AI-based emergency call triage, does not simply substitute for a human function but can fundamentally change the work system.

- Responsibility for healthcare system performance is shared beyond frontline staff, encompassing supervisory, managerial, regulatory, and policy stakeholders. For AI in healthcare, this includes not just users but designers, developers, regulators, and those establishing design standards.

HF/E prioritizes user-focused design, collaborative design, and joint creation of systems

- HF/E design standards and guidance help ensure that technology integrates effectively into the complex realities of healthcare. User-centered design is an approach to developing interactive systems that focuses on users' needs and applies HF/E knowledge and techniques to make systems usable and useful. User-centered design processes increase effectiveness and efficiency; improve human wellbeing, user satisfaction, accessibility, and sustainability; and counteract possible adverse effects of use on human health, safety, and performance.

- User-centered design begins by identifying stakeholders and their needs, and then actively involves them in the collaborative design (co-design) and joint creation (co-production) of technology and systems. Co-design and co-production are partnerships among the people who work in the system, people with lived experience of it (patients and families/carers), and the designers of technology and other system components, drawing on the knowledge, resources, and contributions of each.

- A key element of co-design and co-production is defining measures of success from the perspectives of multiple stakeholders. In some regions, health systems have published best-practice guides for digital and data-driven health technologies that set out value propositions and outcome measures for organizations, staff, and patients. Defining a range of measures of AI's value in healthcare is challenging, but HF/E can help by mapping the interactions among system components and identifying outcome, process, and balancing measures, i.e., measures that capture AI's potential knock-on effects on task performance, social dynamics among staff, relationships with patients, trust, roles, responsibilities, and workflows.

Levels of Automation

AI deployment introduces both familiar and novel obstacles

Understanding levels of automation is crucial for effective AI implementation. As automation spread through industry in the 1970s and 1980s, HF/E studies of "automation surprises" and "automation paradoxes" identified the problems that arise after automation is introduced. The core misconception is that automation simply replaces humans; in practice, it transforms human roles.

However, "smart" systems pose entirely new challenges that were absent from traditional automation design. Intelligent agents can augment human activity in ways that were not possible when machines mainly substituted for physical labor. Interactions with connected AI systems may evolve into human-AI relationships, particularly if the AI expresses personality-like traits through its interface. Social aspects become relevant, as does a mutual understanding of behaviors and norms. Consider common voice-activated virtual assistants (e.g., Amazon Alexa or Apple Siri), which aim to support natural social interaction. Health and care examples include mental health chatbots and assistive robots. These human-technology relationships, with their social, cultural, and ethical dimensions, carry greater weight for AI systems than for traditional automation. Clinicians, patients, and AI will increasingly work in partnership within wider health systems.

Various configuration choices exist for incorporating AI into medical frameworks

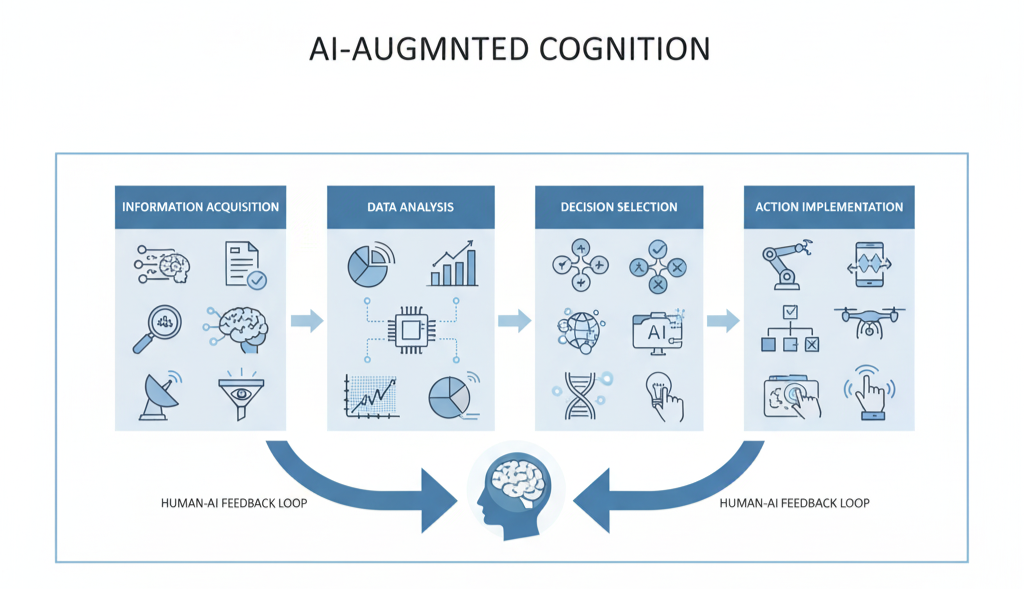

The automation literature proposes classifications of levels of automation to help designers decide which functions to automate and to what degree. These range from no automation, through intermediate levels, to full automation without human involvement. Such classifications highlight how automation choices affect human performance, including workload, situation awareness, trust, reliance, and skills. From a systems perspective, decisions about what to automate must rest on more than technical feasibility.

Levels-of-automation classifications can be combined with models of human information processing, which divide human activity into stages such as data gathering (perception/sensing), data analysis (comprehension/sense-making), choice selection (evaluating options, constraints, and consequences), and action execution. This helps designers consider different forms of human-AI interaction, e.g., for machine learning-based medical devices.
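
One way to apply this pairing is to describe a proposed AI tool as a profile: one automation level for each information-processing stage. The sketch below is hypothetical. The stage names follow the text, but the four-point level scale, the example infusion pump profile, and the rule that flags stages where the human is fully removed are illustrative assumptions, not a published taxonomy.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Information-processing stages named in the text.
    DATA_GATHERING = 1
    DATA_ANALYSIS = 2
    CHOICE_SELECTION = 3
    ACTION_EXECUTION = 4


class Level(IntEnum):
    # Hypothetical, simplified per-stage automation scale.
    NONE = 0      # human performs the stage unaided
    SUGGEST = 1   # AI offers input, human decides
    ACT_VETO = 2  # AI acts unless a human intervenes
    FULL = 3      # AI acts with no human involvement


def stages_without_human(profile: dict[Stage, Level]) -> list[Stage]:
    """Stages the AI fully automates, where human SA and skills may erode."""
    return sorted(s for s, lvl in profile.items() if lvl == Level.FULL)


# Hypothetical profile for an AI-assisted infusion pump.
pump_profile = {
    Stage.DATA_GATHERING: Level.FULL,
    Stage.DATA_ANALYSIS: Level.FULL,
    Stage.CHOICE_SELECTION: Level.SUGGEST,
    Stage.ACTION_EXECUTION: Level.ACT_VETO,
}
print([s.name for s in stages_without_human(pump_profile)])
# ['DATA_GATHERING', 'DATA_ANALYSIS']
```

Describing a design stage by stage, rather than with a single overall level, makes it easier to see where people are taken out of the loop and what they would need in order to step back in.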

These basic categorizations can yield insights into how different degrees of automation affect human performance in different scenarios.

Contemporary human-centered AI thinking extends one-dimensional levels-of-automation classifications by treating human autonomy and AI autonomy as separate dimensions, forming a two-dimensional matrix. This emphasizes that increasing AI autonomy need not reduce human autonomy; instead, AI could augment human action, so that both retain autonomy rather than one or the other.
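
The contrast between the one-dimensional and two-dimensional views can be sketched in a few lines. The 0 to 1 scales, the 0.5 threshold, and the example designs below are hypothetical assumptions for illustration. The point the sketch shows is that on a single scale human control falls as AI autonomy rises, whereas in the matrix a design can score high on both.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPoint:
    name: str
    ai_autonomy: float    # 0.0 (none) to 1.0 (full), hypothetical scale
    human_control: float  # 0.0 (none) to 1.0 (full), hypothetical scale


def one_dimensional_human_control(ai_autonomy: float) -> float:
    # On a single levels-of-automation scale, human involvement is implicitly
    # whatever the machine does not do: the two trade off by construction.
    return 1.0 - ai_autonomy


def quadrant(p: DesignPoint, threshold: float = 0.5) -> str:
    # In the two-dimensional view, the axes vary independently.
    ai = "high AI autonomy" if p.ai_autonomy > threshold else "low AI autonomy"
    human = ("high human control" if p.human_control > threshold
             else "low human control")
    return f"{ai}, {human}"


# Hypothetical design options for the same clinical task.
designs = [
    DesignPoint("manual dose calculation", 0.1, 0.9),
    DesignPoint("unsupervised autonomous pump", 0.9, 0.1),
    DesignPoint("autonomous pump with interactive oversight", 0.8, 0.8),
]
for d in designs:
    implied = one_dimensional_human_control(d.ai_autonomy)
    print(f"{d.name}: {quadrant(d)} (1-D scale implies human control {implied:.1f})")
```

The third design is the kind of option that the one-dimensional view cannot express, because it pairs high AI autonomy with high human control.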

Applying HF/E in Healthcare AI

A systems perspective based on the SEIPS model, together with levels-of-automation classifications, gives designers and leaders valuable tools for understanding AI's potential effects in healthcare and the interaction design options available. Here, we highlight core HF/E principles relevant to the design, rollout, and use of AI.

Situation awareness

Healthcare workers need a solid understanding of the current situation, including their patients, their needs and individual circumstances, medical histories, and ongoing care plans, to inform future decisions and manage the patient's pathway. This evolving understanding is termed situation awareness (SA). SA is vital for patients too, e.g., for understanding their own health, knowing what information they need to share, escalating concerns, asking questions, and giving informed consent. Assessing the impact on SA is therefore key in AI design, rollout, and use. This encompasses:

- SA needs for people and AI systems (what each requires for effective operation);

- How people and AI exchange SA info (what, when, format); and

- How people comprehend AI actions and its SA.

Formally, SA is a state of knowledge resulting from a dynamic process, described at three ascending (though not always sequential) levels: perception of relevant data and cues (Level 1), comprehension of their meaning (Level 2), and projection of future status (Level 3), which informs decisions and action selection. SA can be influenced by system elements such as individual expertise, training, workload, and the design of interfaces and AI systems.

More recently, the distributed SA (DSA) model has emphasized a systems view of SA. According to DSA, SA is distributed across the sociotechnical system and is built through interactions between agents, both human (e.g., clinicians) and non-human (e.g., AI). Crucially, the SA required for successful performance is not held by any single agent; rather, different agents hold compatible views of the situation. Non-human agents such as AI can therefore be considered situationally aware. This has major design implications, including the need to explicitly consider the SA requirements of AI and how SA information is shared with people and other technologies.

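SA is developed and updated dynamically through SA transactions, i.e., exchanges of SA information between agents. These exchanges can be human-human, human-non-human, or non-human-non-human (e.g., an ECG monitor exchanging data with an AI system). Humans transact with each other through speech and non-verbal communication; technology transacts with humans through displays, alerts, text, symbols, and system states; humans transact with technology through data input; and technologies transact with each other through data and communication protocols. The exchange of SA information between people and AI, and between AI and other systems, is therefore a critical design consideration. It requires an understanding of the SA needs of every component of the clinical system.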

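The use of AI can either enhance or erode SA. Automation (including AI) is often introduced to reduce user workload (see "Workload"), but it can also reduce SA. Users are removed from the main information processing and are presented only with a summary recommendation from the AI, which makes it hard to grasp the underlying context and factors (see "Explanation and trust"). In supervisory roles, attention may drift, or workload may become so low that SA diminishes. In the 2018 crash involving an Uber self-driving test vehicle (a modified Volvo), for example, the safety driver was distracted by a phone and unaware of the pedestrian.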

The impacts of different AI design options should therefore be evaluated systematically. SA challenges arising from poor AI design choices include increased workload from additional data and complexity, and overreliance leading to poor monitoring. Moreover, even when AI or autonomous agents achieve high accuracy, it is not straightforward to decide what to communicate to clinicians during normal operation, and how, so that they maintain SA. This cannot be addressed by looking at each AI system in isolation: because clinicians interact with many systems (e.g., multiple autonomous infusion pumps), communication design must take account of human information needs and limitations.

AI design options should be explored with the SA needs of people and other agents in mind. This requires a deep understanding of the domain, the system, and the tasks, which can be achieved through task analysis, especially cognitively focused methods such as cognitive work analysis or applied cognitive task analysis. Such analyses can help anticipate issues before AI is embedded and explore the effects of different levels of AI support and autonomy.

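AI applications, especially autonomous ones, need SA too. An autonomous infusion pump, for instance, needs to know about other medications the patient is receiving that may affect their physiology and response, whether these are delivered through other pumps or administered by clinicians. The adage "if it isn't documented, it didn't happen" applies critically here: activities that are not documented or communicated to the AI do not exist for it, with potentially catastrophic consequences.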
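
The consequence of "if it isn't documented, it didn't happen" can be illustrated with a toy model. In this hypothetical sketch, an autonomous pump builds its picture of the patient only from documented medication events, so anything given but not recorded is invisible to it. The class names, fields, and generic drug labels are invented for illustration and do not describe any real device interface.

```python
from dataclasses import dataclass, field


@dataclass
class MedicationEvent:
    drug: str         # generic, hypothetical drug label
    route: str
    documented: bool  # whether the event reached a system the AI can read


@dataclass
class PumpSituationModel:
    """What a hypothetical autonomous pump 'knows': only documented events."""
    known: list[MedicationEvent] = field(default_factory=list)

    def ingest(self, events: list[MedicationEvent]) -> None:
        # Undocumented events never enter the pump's situation model.
        self.known.extend(e for e in events if e.documented)

    def known_drugs(self) -> set[str]:
        return {e.drug for e in self.known}


def sa_gaps(actual: list[MedicationEvent], model: PumpSituationModel) -> set[str]:
    """Drugs the patient actually received that the AI is unaware of."""
    return {e.drug for e in actual} - model.known_drugs()


ward_events = [
    MedicationEvent("vasopressor", "pump 2", documented=True),
    MedicationEvent("diuretic", "IV bolus given by nurse", documented=False),
]
pump = PumpSituationModel()
pump.ingest(ward_events)
print(sa_gaps(ward_events, pump))  # {'diuretic'}
```

In a real system the gap would not be visible to the AI at all; the sketch can only compute it because it has access to the ground truth that the pump lacks.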

Various methods exist to assess and measure (human) SA, and they can be applied throughout the product or service design lifecycle. A commonly used method is the Situation Awareness Global Assessment Technique (SAGAT), which measures SA directly in simulations of the relevant task or scenario. The scenario is interrupted (frozen) at selected points, and participants answer probe questions covering the three SA levels, derived from an initial SA requirements analysis. SAGAT requires in-depth scenario development, but it offers valuable insights into the impact of technology interventions on SA.
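
Once probe answers have been checked against the frozen scenario's ground truth, SAGAT data are commonly summarized as the proportion of correct responses. The minimal sketch below does this per SA level. The data layout and example responses are hypothetical; a real study would also look at results per probe and per freeze, and compare design conditions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProbeResponse:
    freeze_id: int  # which simulation freeze the probe was asked at
    sa_level: int   # 1 = perception, 2 = comprehension, 3 = projection
    correct: bool   # answer compared with the simulation's ground truth


def sagat_scores(responses: list[ProbeResponse]) -> dict[int, float]:
    """Proportion of correct probe answers per SA level."""
    asked: dict[int, int] = defaultdict(int)
    right: dict[int, int] = defaultdict(int)
    for r in responses:
        asked[r.sa_level] += 1
        right[r.sa_level] += int(r.correct)
    return {level: right[level] / asked[level] for level in sorted(asked)}


# Hypothetical responses from one participant across two freezes.
responses = [
    ProbeResponse(1, 1, True), ProbeResponse(1, 2, True), ProbeResponse(1, 3, False),
    ProbeResponse(2, 1, True), ProbeResponse(2, 2, False), ProbeResponse(2, 3, False),
]
print(sagat_scores(responses))  # {1: 1.0, 2: 0.5, 3: 0.0}
```

A profile like this one, with good perception but weak projection, would suggest that an AI design keeps users informed of the current state but not of where the situation is heading.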

Workload

AI applications are often introduced with the intention of reducing the workload of healthcare professionals. For example, an AI chatbot or symptom checker can elicit information from a patient, record and analyze it, and summarize it for the GP before the appointment. This gives the GP a quick overview and helps them focus on the likely diagnosis.

However, AI can also unintentionally increase workload. This is familiar from electronic health records, many of which are notorious for their administrative burden. AI needs data, and applications that cannot sense or perceive independently must be fed data, often by manual input. AI can also increase workload, and frustration, if it is hard to use. An everyday example is the time-consuming interaction with voice AI assistants for anything beyond simple queries; similar frustrations can be anticipated with voice AI in operating theaters or intensive care.

Workload is the demand placed on a person during an activity. For assessment purposes, it is often divided into physical and mental (cognitive) workload; the latter is relevant to many healthcare activities, such as patient monitoring, diagnosis, and treatment planning. Workload is experiential and person-dependent: the same scenario may be experienced differently by a novice and by an experienced clinician. The level of mental workload is key to the safety, reliability, and efficiency of the sociotechnical system. Inappropriate levels, whether too high or too low, can contribute to errors and cause stress and fatigue, and prolonged high stress and fatigue adversely affect staff health and wellbeing.

Many methods exist to assess and measure workload, and they are useful for evaluating the impact of different options for designing and embedding AI. Assessments should consider the various users and stakeholders and the full range of tasks.
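
As one example of such a method, the NASA Task Load Index (NASA-TLX), a widely used subjective workload instrument that is not named in the source, asks people to rate six subscales from 0 to 100 and weights the subscales using 15 pairwise comparisons. The sketch below computes the weighted overall score. The ratings and weights are hypothetical, for two imagined ways of entering data into an AI tool.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def tlx_weighted(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted NASA-TLX workload score on a 0-100 scale.

    ratings: 0-100 per subscale (for 'performance', 0 = perfect, 100 = failure).
    weights: number of times each subscale was chosen in the 15 pairwise
             comparisons, so the weights sum to 15.
    """
    if set(ratings) != set(SUBSCALES) or set(weights) != set(SUBSCALES):
        raise ValueError("ratings and weights must cover all six subscales")
    if sum(weights.values()) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Hypothetical weights from one clinician's pairwise comparisons.
weights = {"mental": 5, "physical": 0, "temporal": 3,
           "performance": 2, "effort": 3, "frustration": 2}
# Hypothetical ratings for two imagined data-entry designs.
voice_entry = {"mental": 70, "physical": 10, "temporal": 60,
               "performance": 40, "effort": 65, "frustration": 75}
structured_form = {"mental": 55, "physical": 10, "temporal": 45,
                   "performance": 30, "effort": 50, "frustration": 35}
print(round(tlx_weighted(voice_entry, weights), 1))      # 63.7
print(round(tlx_weighted(structured_form, weights), 1))  # 46.0
```

Because workload is person-dependent, such scores are most informative when compared across design options for the same users and tasks, not read as absolute thresholds.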

Automation bias

Automation bias, closely related to automation complacency, is the tendency of people to trust and rely uncritically on automated systems. For example, recent accidents involving partially automated cars, as well as simulator studies, show people relying on the autopilot and spending time on their phones instead of monitoring traffic. In aviation, well before self-driving cars, accidents occurred because pilots did not disengage a malfunctioning autopilot or did not monitor instruments. However, attributing an accident to automation bias after the event is subject to hindsight bias and is not helpful. It is better to pay attention to patterns of human-automation interaction and their potential breakdowns, and to consider these in design. Similar scenarios can arise in healthcare; for example, radiology AI could lead to overreliance on AI interpretation of images. Such overreliance has been observed in studies of traditional decision support systems.

AI applications are often marketed as highly reliable, and professionals are expected to rely on them, which creates a risk of automation bias. Studies indicate that the accuracy of an AI system in isolation does not predict clinical performance when the AI produces inaccurate outputs.

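It is therefore important to inform clinicians about the accuracy of algorithms and to design for effective human-AI interaction and coordination. Strategies include designing for SA (see "Situation awareness"), explaining AI decisions (see "Explanation and trust"), and training clinicians on the AI's limitations and what to watch out for (see "Training").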

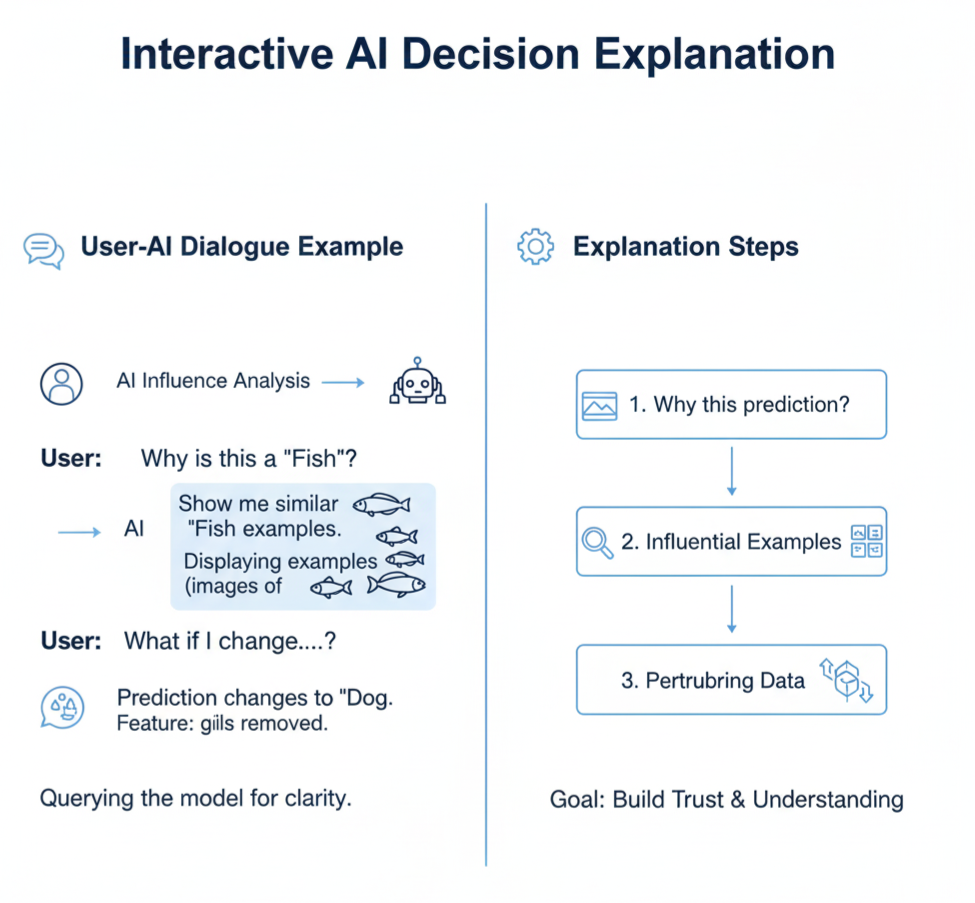

Explanation and trust

An AI oversight body has strongly recommended that AI tools be able to explain their decisions satisfactorily, stating that it is unacceptable to deploy AI systems that could have a substantial impact on an individual's life unless they can give a full and satisfactory explanation of the decisions they take.

While the AI community is focused on developing explainable algorithms, there is limited agreement on how this can be achieved. One approach is to use algorithms that produce inherently interpretable models; these are usually simpler (e.g., linear) and may underperform more complex approaches such as deep learning on many tasks. Another approach, for making complex and opaque models intelligible to people, is to map them onto a simpler explanatory model.

Explanation is often a static, post-decision account of an AI decision, for example highlighting the data features that were used. This assumes that the AI can abstract low-level data features and articulate them as higher-level features that are meaningful to people. Moreover, AI algorithms are based on correlations in datasets, whereas human explanations are based on causation. Although it is well known that correlation does not imply causation, people may misinterpret AI output as causal, assuming that x causes y when there is only an association.

Explanation is therefore better viewed as a social process, a conversation between agents. This means that (a) it should be interactive, allowing dialogue and questions, and (b) what counts as a sufficient or satisfactory explanation depends on the context and circumstances (e.g., it differs for a patient and for a professional).

Where appropriate, AI systems should allow users to ask questions or take actions to obtain explanations. AI should indicate how and why decisions were made, allow users to interrogate those decisions, e.g., through counterfactual questions ("what if this value were changed, removed, or added?"), and enable dialogue between the user and the AI.
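
To illustrate both the interpretable-model route and the counterfactual questions described above, the sketch below uses a hypothetical linear risk score. Each feature contributes its weight multiplied by how far its value is from a baseline. Because of this, the model can show which inputs drove a result, and it can answer "what if this value were changed or removed?" by re-scoring the patient with that one input altered. Here, removing an input resets it to its baseline. The feature names, weights, and baselines are invented for illustration, and this is not a clinical model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LinearRiskModel:
    """Hypothetical, inherently interpretable linear risk score."""
    intercept: float
    weights: dict[str, float]    # points per unit above baseline
    baselines: dict[str, float]  # reference value contributing zero points

    def contributions(self, x: dict[str, float]) -> dict[str, float]:
        # Each feature's share of the score can be read off directly.
        return {f: w * (x[f] - self.baselines[f]) for f, w in self.weights.items()}

    def score(self, x: dict[str, float]) -> float:
        return self.intercept + sum(self.contributions(x).values())

    def what_if(self, x: dict[str, float], feature: str,
                value: Optional[float]) -> float:
        """Counterfactual: re-score with one feature changed, or removed (None)."""
        changed = dict(x)
        changed[feature] = self.baselines[feature] if value is None else value
        return self.score(changed)


model = LinearRiskModel(
    intercept=1.0,
    weights={"heart_rate": 0.05, "resp_rate": 0.2, "lactate": 1.5},
    baselines={"heart_rate": 80, "resp_rate": 16, "lactate": 1.0},
)
patient = {"heart_rate": 110, "resp_rate": 24, "lactate": 3.0}
print({f: round(c, 2) for f, c in model.contributions(patient).items()})
print(round(model.score(patient), 2))
print(round(model.what_if(patient, "lactate", None), 2))  # lactate removed
print(round(model.what_if(patient, "heart_rate", 80), 2))  # heart rate normal
```

As the text notes, this transparency comes at the cost of predictive power on many tasks. For opaque models, counterfactual answers would have to come from re-querying the model itself, which this sketch does not attempt.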

Interactive, user-centered explanation is more than a post-decision account; it involves people as active participants in decision-making. This supports SA, reduces unwarranted overreliance on AI, and enhances human-AI collaboration.

Human-AI Teaming

AI cannot be designed in isolation from the people who use it or are affected by it. As AI becomes more advanced and powerful, it should be assumed that it will be used within, and work with, teams of professionals and patients. People may not view AI as a team member, but its computational abilities mean that it can play a more active and dynamic role in teams than earlier automation. Human-AI teaming is therefore key to AI design, implementation, and use. Yet the value of combining AI with human expertise, intuition, and creativity is often underused, and the challenges of human-AI teaming in clinical settings are underappreciated. Experimental studies suggest that AI can adversely affect the performance of high-performing teams.

Team members engage in two forms of activity: teamwork and taskwork. Teamwork is how members interact and coordinate to achieve shared goals; taskwork comprises the individual tasks that members perform separately from others. Where people and AI coordinate to achieve health goals, teamwork must be considered.

Teamwork models provide an overview of key aspects of human-AI teaming. According to the Big Five model of teamwork, effective teams need team leadership, mutual performance monitoring, backup behavior, adaptability, and team orientation. The model holds that teams are effective when they display these behaviors supported by three coordinating mechanisms: shared mental models, mutual trust, and closed-loop communication.

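The Big Five behaviors are key considerations in AI design. Take mutual performance monitoring, i.e., keeping track of other team members' work while carrying out one's own. When designing AI, procedures, and protocols, it must be ensured that human team members understand the AI's roles and responsibilities, what it is doing and why, and whether it is performing effectively. More challenging, for advanced AI, is ensuring that the AI understands human roles and responsibilities and what the humans are doing and how. This matters especially for dynamic AI that takes over tasks from humans when they are at risk of overload.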

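The supporting mechanisms of the Big Five model are also key. A shared mental model means that team members share an understanding of the team's goals and tasks and of how individual tasks contribute to them. Research shows that teams with shared mental models coordinate and perform better. As AI becomes more advanced, it is important to ensure an appropriate shared mental model between human and AI team members.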

Training

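Expertise develops through frequent exposure and training, which makes training and skill fade important issues in healthcare, where professionals value their skills. With the introduction of AI and autonomous systems, many tasks currently performed by clinicians may routinely be taken over by AI. For example, radiologists train by reviewing large numbers of images showing a variety of issues, most of them routine. Would their expertise fade if they saw only images selected by AI rather than the broad range they see today? Would ambulance call handlers lose the ability to recognize ineffective breathing in cardiac arrest if AI routinely performed this task?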

With autonomous infusion pumps, will clinicians maintain the skills needed for complex drug dose calculations? Smart pumps have already led to some deterioration of these skills among nurses.

Maintaining core skills is key to giving clinicians the confidence to override or take over from AI. One strategy is regular manual involvement by humans ("human-in-the-loop"; see Situation awareness), although this can be hard to implement in practice. It can be backed up by purpose-designed training, such as simulation.
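As a minimal sketch of how regular manual involvement might be scheduled, the Python below routes a fixed share of cases to a clinician for independent assessment before the AI output is relied on. The function name `route_for_manual_practice`, the hash-based selection and the default 10% share are all assumptions for illustration, not a recommended policy or any product's behavior.

```python
import hashlib


def route_for_manual_practice(case_id: str, manual_fraction: float = 0.1) -> bool:
    """Decide whether a case goes to a clinician for independent manual assessment.

    Hypothetical policy: a fixed share of cases is handled manually first, so that
    clinicians keep practicing skills the AI would otherwise perform routinely.
    """
    if not 0.0 <= manual_fraction <= 1.0:
        raise ValueError("manual_fraction must be between 0 and 1")
    # Hash the case ID so the same case is always routed the same way (auditable),
    # while selections are spread roughly uniformly across cases.
    digest = hashlib.sha256(case_id.encode("utf-8")).digest()
    # Map the first 4 bytes to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < manual_fraction


if __name__ == "__main__":
    ids = [f"case-{i}" for i in range(1000)]
    manual = [c for c in ids if route_for_manual_practice(c)]
    print(f"{len(manual)} of {len(ids)} cases routed for manual assessment")
```

Hash-based selection is only one option; a rota or time-based schedule would serve the same purpose. In practice, the share and the types of case selected would need to be agreed with clinicians and balanced against workload, which this sketch does not model.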

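Healthcare workers will increasingly become users, co-workers and supervisors of AI (see Human-AI Teaming). They will need to understand how to use AI, its potential weaknesses, and how its safe operating envelope is defined, maintained and potentially breached. They will also need to make sense of the AI's decisions and actions so that they can provide clinical checks.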
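The idea of a safe operating envelope can be made concrete with a minimal, hypothetical sketch: the envelope is represented as the input ranges on which an AI tool was validated, and any case outside those ranges is flagged for clinical checking rather than processed silently. The feature names, limits and the `Range`, `envelope_breaches` and `triage` helpers are illustrative assumptions, not clinical thresholds or any product's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical envelope: the input ranges an AI tool was validated on.
# Feature names and limits are illustrative only, not clinical thresholds.
ENVELOPE = {
    "age_years": Range(18, 90),
    "heart_rate_bpm": Range(40, 180),
}


def envelope_breaches(inputs: dict, envelope: dict) -> list:
    """List the reasons a case falls outside the validated envelope (empty if none)."""
    reasons = []
    for name, allowed in envelope.items():
        if name not in inputs:
            reasons.append(f"{name}: missing")
        elif not allowed.contains(inputs[name]):
            reasons.append(f"{name}={inputs[name]} outside [{allowed.low}, {allowed.high}]")
    return reasons


def triage(inputs: dict, envelope: dict = ENVELOPE) -> str:
    """Flag out-of-envelope cases for clinical checking instead of passing them through."""
    breaches = envelope_breaches(inputs, envelope)
    if breaches:
        return "clinician review: " + "; ".join(breaches)
    return "within envelope"


print(triage({"age_years": 45, "heart_rate_bpm": 72}))  # within envelope
print(triage({"age_years": 12}))
# clinician review: age_years=12 outside [18, 90]; heart_rate_bpm: missing
```

Returning the reasons rather than a bare flag is a deliberate choice. It gives the clinician something to check against, which echoes the emphasis on making sense of AI behavior.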

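The need to understand AI behavior can be addressed in design, for example through interactive explanation interfaces (see Explanation and trust). Design can be complemented by training that gives users a baseline understanding of how the AI works, so that they can identify its limitations and problems. Training on AI could cover which aspects can be configured and how to spot inaccuracies or problems. A better understanding of the AI's limitations can help guard against over-reliance.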

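Such approaches enable clinicians to practice their skills and to understand how AI behaves and where its limits lie. However, while training is an essential part of quality improvement, it should not divert attention from good technology design and effective system-level interventions.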

Interactions Among Personnel and Patients

Policy documents expect the introduction of AI to contribute to "returning the gift of time to clinicians", with AI taking over tasks so that clinicians can focus on patients. If this works well, clinicians will have more time to interact with patients and their relatives, for example to explain treatment. This is vital for patients, who value close relationships with professionals.

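However, it is also conceivable that the patient experience could worsen when AI takes over tasks. When adjusting an infusion, for example, nurses interact with patients, detect their needs, and provide comfort and companionship. A systems approach must therefore consider not only the medical task taken over by AI (such as by an autonomous infusion pump), but also the impact on relationships and on patients' psychosocial needs. There is a real risk that, instead of receiving the gift of time, clinicians will be pulled into other activities, potentially away from the bedside because less manual work is needed there. In that case, the introduction of AI might not lead to more humanized care but could instead leave patients more isolated.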

In some regions, guidance on "shared decision making" applies to care provided with AI. Care that involves AI must support ongoing relationships between patients and providers, and recognize patients as experts in their own care and as partners in shared decisions about it. Patients' situation awareness, and AI support for it, is critical to shared decision making.

Ethical Considerations

- Considering HF/E in the development and use of AI, especially in healthcare, must include the serious ethical challenges for patient safety, privacy, and fairness and equity. These challenges vary with the type of system, the AI algorithms and data involved, and how the system is used. For systems classed as medical devices, these issues are partly addressed by regulations and standards (e.g., IEC 62366-1). But because AI is new and emerging, regulation and standards lag behind, and manufacturers, regulators and users face significant challenges in applying HF/E principles.

- Questions may concern the principles by which AI operates, and therefore its design, development and testing. Ongoing work addresses key ethical issues such as reducing or avoiding bias in models, protecting privacy, and maintaining the trust of staff, patients and the public in AI. Another issue is "appropriate feedback", or transparency, from AI that helps users understand the basis of a result (see Explanation and trust). This requires users to have the technical competence to assess that feedback (see Training).

- Given the breadth of ethical issues and how their complexity varies across applications, a principle-based approach is sensible: it identifies high-level areas and objectives to be built into systems and into their development and use. The table below gives examples of principles for AI systems and their application to healthcare AI. Arguably, many of these ethical issues apply to all healthcare applications, whether or not they involve AI. However, AI has additional or novel effects on many of them, creating an increasingly complex landscape.

| Principle | Description |
|---|---|
| Transparency | Encompasses explainability, comprehensibility and disclosure. In healthcare, AI should be transparent to professionals and patients so that its output can be explained and justified. |
| Justice and fairness | Raises issues of fairness, consistency, inclusion and openness to challenge. For healthcare AI, this concerns the data sets used and their potential to discriminate against individuals or groups. |
| Non-maleficence | Preventing harm is central to healthcare. With AI, adverse events may not be well identified, and this is complicated by AI components changing over time. |
| Responsibility | Moral and legal responsibility for AI decisions needs to be clear. |
| Privacy | Privacy and confidentiality, mainly of patient data, are significant given the large data sets used to train AI. |
| Beneficence | Developers of healthcare AI should justify their claims of benefit, especially on a risk-benefit basis. |
| Freedom and autonomy | Data contributors should retain control to prevent distribution without their consent. AI should be designed to support users' own healthcare decisions. |
| Trust | Fundamental to healthcare relationships; fostered when AI consistently meets public expectations and is transparent. |
| Sustainability | Development of healthcare AI should take account of environmental protection and of innovation and competition in the wider commercial ecosystem. |
| Dignity | Concerns how people are treated and the extent to which human rights are respected. The impact of AI on healthcare delivery and experience is relevant to dignity. |
| Solidarity | AI should not threaten social cohesion and should be responsive to the needs of vulnerable people. |

Conclusions

In this document, we outline the vision of Citrusbug and its local and worldwide collaborators for Human Factors and Ergonomics in the design and use of AI tools in medical environments. Numerous examples already indicate AI's potential to fundamentally reshape access to healthcare and how it is delivered. However, the goal of using AI to boost health system efficiency and to improve patient safety, patient experience and staff health is currently hampered by two things: a narrow technological focus that sets people against AI ("human vs. algorithm"), and sparse real-world evidence for AI.

It is time to move beyond this technology-centric perspective and adopt a systems approach to AI, that is, to consider human-AI interaction from the start within the broader medical and health context. The HF/E aspects discussed in this document (situation awareness, workload, automation bias, explanation and trust, human-AI teaming, training, interactions among personnel and patients, and ethical considerations) are crucial for trustworthy and sustainable AI-enabled healthcare.

AI innovators and developers should view HF/E as a core contribution to good product design, a view reinforced by regulatory expectations that HF/E best practices are followed. Those who procure and use AI should demand evidence that it works with and for people in their specific settings. Funders of research and development should promote multidisciplinary studies in which HF/E is an essential component. Reporting guidelines for AI studies increasingly reflect a consensus on including rigorous HF/E practices and evidence.

These activities need to be underpinned by HF/E education and support for medical professionals and organizations. Some organizations employ embedded HF/E experts who work daily alongside professionals to apply HF/E, and such embedded professionals can initiate and sustain systemic interventions. In September 2023, Citrusbug and its health education partners launched the "Healthcare Human Factors Training Pathway". The pathway supports both academic and work-based learning routes to accredited Technical HF/E Specialist status. This professional approach helps professionals and organizations apply HF/E effectively in practice, which is key to successfully embedding AI in health systems.
