Ethical AI in Software Development: Building Products That Feel Human

- September 18, 2025

- by Ishan Vyas

Software development today relies heavily on Artificial Intelligence (AI) to build robust, future-proof products. But the pace of AI adoption also opens up ethical gaps, which companies can close by adopting ethical AI practices.

Since users are leaning towards products that combine AI with a human touch, you must prioritize ethical AI throughout the software development process. AI-driven products need transparency, fairness, and trustworthiness to move from a standard deployment to a more accountable approach.

Let’s cover the basics of ethical AI software development and the parameters that can make your AI products seem more human to users.

Ethical AI Development: Understanding What Makes AI “Human”

As AI has started gaining more popularity in content and development, the biggest question on everyone’s mind has been: What are the ethical ramifications of this AI adoption boom?

Ethical AI is about balancing machine intelligence and efficiency with human values such as empathy, transparency, and autonomy during AI development. The topic is especially relevant now, as over 32.1% of companies consider transparency to be a top ethical concern in 2025. Since that number has all but doubled from the previous year, we can take it as a clear indication that companies increasingly see AI as a threat to the human element in processes.

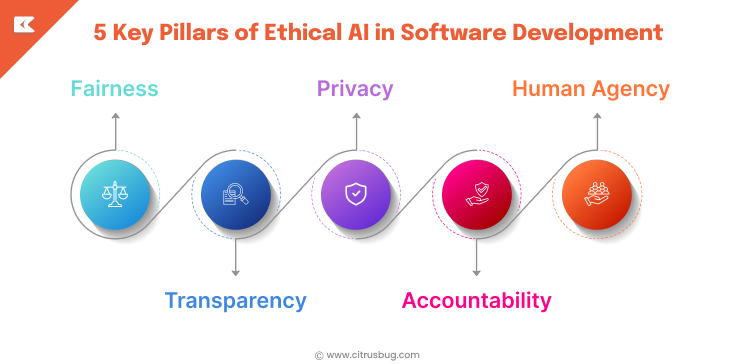

5 Key Pillars of Ethical AI in Software Development

When you think of integrating AI into the software development cycle or a product, you can consider endless use cases, but establishing a strong foundation is your first priority. An important part of that process is defining the core principles that will govern the integration of AI in your product.

With that in mind, some of the key pillars for ethical AI to consider during software development are:

- Fairness: Mitigate bias and ensure equitable outcomes across demographics and categories, and maintain consistency in output.

- Transparency: Make decisions and model behavior understandable to all users and stakeholders.

- Privacy: Protect sensitive and personal data by default.

- Accountability: Assign clear responsibilities for different kinds of outcomes, successes, and failures.

- Human Agency: Empower users with complete control and oversight over AI recommendations.

8 Actionable Ways to Integrate Ethical AI in Software Development

Since we have now looked at the role of Ethical AI and the importance of making AI as human as possible, let us look at the most actionable ways to integrate it into software development cycles.

#1 Conduct Ethical Risk Assessments in All Stages

To keep a clear view of the ethics of AI usage in a typical software development cycle, you must conduct ethical risk assessments throughout the entire cycle, from ideation to deployment and beyond.

Over 60% of companies have now established ethical AI guidelines or review boards, according to a global survey conducted in 2025. Companies can also use checklists and play out potential scenarios to flag risks proactively, and audit datasets and model outcomes regularly to reduce bias and improve fairness.

For example, Microsoft has established an internal AI Ethics Committee to conduct detailed assessments and review new projects as they are launched.
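A regular audit of model outcomes can start very small. The sketch below is one possible check, not a complete fairness audit: it compares the rate of favorable decisions across groups and flags the model for review when the gap is large. The function names, the sample data, and the 0.2 threshold are all assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (demographic group, model decision)
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

REVIEW_THRESHOLD = 0.2  # assumed tolerance; pick one that fits your context
gap = parity_gap(sample)
needs_review = gap > REVIEW_THRESHOLD
```

A single number like this will not prove a model is fair, but tracking it at every stage gives your review board something concrete to discuss.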

#2 Leverage Frameworks and Robust Data Governance

Implementing strong frameworks, such as the UNESCO recommendations and industry regulations, can help you create and standardize ethical practices around AI usage. In 2025, 72.2% of companies report that they know and comply with AI regulations.

For instance, Google has defined AI principles that can help it avoid harm, maintain fairness, and ensure privacy across its data pipeline.

Adopt global frameworks such as the EU AI Act and the UNESCO Recommendation on the Ethics of Artificial Intelligence as the blueprints that govern your AI usage. You can also implement clear and structured data governance policies that protect the data you collect, store, and use.
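A data governance policy is easier to enforce when it lives in code as well as in a document. Here is a minimal sketch, assuming a policy in which some fields are never stored and others are pseudonymized before storage. The field names, the `apply_policy` helper, and the policy itself are hypothetical, and a salted hash is pseudonymization, not full anonymization.

```python
import hashlib

PSEUDONYMIZED_FIELDS = {"email", "phone"}  # assumed policy: store only a hash
DROPPED_FIELDS = {"ssn"}                   # assumed policy: never store


def apply_policy(record, salt):
    """Return a copy of `record` that is safe to store under the policy."""
    cleaned = {}
    for key, value in record.items():
        if key in DROPPED_FIELDS:
            continue  # drop the field entirely
        if key in PSEUDONYMIZED_FIELDS:
            digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
            cleaned[key] = digest[:16]  # shortened hash stands in for the value
        else:
            cleaned[key] = value
    return cleaned


raw = {"email": "user@example.com", "ssn": "000-00-0000", "plan": "pro"}
stored = apply_policy(raw, salt="replace-with-a-secret-salt")
```

Running every record through one function like this before it reaches your database keeps privacy "by default" rather than dependent on each developer remembering the rules.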

#3 Utilize Cross-functional Teams to Drive Development

Companies today must strive to create cross-functional teams that combine a wide range of resources, such as ethicists, user advocates, engineers, and designers, to strengthen their software development cycle. A cross-functional team will help you bring up and consider multiple viewpoints in every strategic decision.

What's more, cross-functional collaboration is used in over 48% of organizations that have a mature and comprehensive AI governance process. Consider creating a dedicated ethics council and making ethical review mandatory when defining product milestones. You can also foster continuous training on privacy, bias, and responsible software development procedures.

For instance, companies like Accenture and IBM bring together several internal and external ethics panels that help resolve ethical dilemmas and define best practices for AI usage.

#4 Balance Automation With Manual Oversight

Automated systems can make your AI processes more efficient, but unchecked automation workflows can magnify biases and even cause catastrophic failures if left unattended for too long. Human-in-the-loop (HITL) frameworks help ensure that humans retain the key decision-making rights, especially in critical contexts.

You can redesign your workflows to incorporate AI suggestions while keeping high-impact and high-value decisions in human hands. You must also routinely flag uncertain or “edge” cases for human review, and use logs and AI techniques to audit automated outputs.

Besides, when creating any kind of content around your software (such as internal documentation, customer-facing knowledge base, etc.), consider using a human-like AI writing tool that can help you produce more engaging, human-grade content as opposed to robotic-sounding fluff produced by tools like ChatGPT.
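The human-in-the-loop routing described in this section can be sketched in a few lines. This is only an illustration under stated assumptions: the `Prediction` and `Router` classes, the `high_impact` flag, and the 0.85 confidence cutoff are hypothetical and would need to match your own model and risk policy.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float          # model score in [0, 1]
    high_impact: bool = False  # e.g. a decision that affects someone's finances


@dataclass
class Router:
    threshold: float = 0.85    # assumed cutoff for "uncertain" cases
    audit_log: list = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Return 'auto' or 'human_review' and record the choice for audits."""
        if pred.high_impact or pred.confidence < self.threshold:
            decision = "human_review"
        else:
            decision = "auto"
        self.audit_log.append((pred.item_id, pred.label, pred.confidence, decision))
        return decision


router = Router()
first = router.route(Prediction("t1", "approve", 0.97))
second = router.route(Prediction("t2", "approve", 0.60))
third = router.route(Prediction("t3", "deny", 0.99, high_impact=True))
```

Here only the first item is handled automatically. The low-confidence item and the high-impact item both go to a person, and all three decisions land in the audit log.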

#5 Explain Decisions in a Clear and Accessible Language

Before the age of AI, explaining the rationale behind important and strategic decisions was pretty straightforward. But as the lines between AI-generated insights and decisions founded on deep research get blurry, explainable AI is the need of the hour.

With a large number of companies reporting a lack of transparency as the biggest challenge in implementing AI, backing AI recommendations and insights with clear data and explanations will help you stand out. To implement this practice, consider replacing highly technical and complex explanations with simple, visual summaries.

For example, Salesforce and Apple provide user dashboards that explain AI-driven recommendations and allow users more control over decisions based on these insights and recommendations.
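To make the idea of a plain-language explanation concrete, here is a rough sketch that turns signed factor weights into a one-sentence summary a user can read. The `explain` function and the sample factors are hypothetical. How the weights are produced (for example, by an attribution method) is outside the scope of this sketch.

```python
def explain(decision, contributions, top_n=2):
    """Build a short summary from signed factor contributions.

    `contributions` maps a human-readable factor name to a signed weight:
    positive values pushed toward the decision, negative values against it.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for name, weight in ranked[:top_n]:
        direction = "supported" if weight > 0 else "weighed against"
        parts.append(f"{name} {direction} it")
    return f"Result: {decision}. Main factors: " + "; ".join(parts) + "."


summary = explain(
    "recommended plan: Basic",
    {"monthly usage": 0.6, "team size": -0.3, "region": 0.05},
)
print(summary)
```

Limiting the summary to the top few factors is a deliberate choice: a short, honest explanation is usually more useful to a non-technical user than a full list of model inputs.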

#6 Retain Control in Users’ Hands

Users often feel a loss of control when using AI, sometimes due to the sheer speed and productivity a typical AI application can deliver. Ethical AI applications, when used correctly, aim to empower people rather than replace or manipulate them into believing that AI output is superior to human output.

Users today expect privacy protocols and AI-powered fraud detection to be built in by design, along with granular controls that let them customize their experience. Consider providing simple mechanisms that let users adjust AI settings or even switch them off. In short, give them custom controls that empower them to use the features fully.

One way you can go about it is by including privacy dashboards and consent prompts in your product, and customizing user experiences based on user input and needs.
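As a rough illustration of user-controlled AI settings, the sketch below gates a recommendation feature behind a master switch and an explicit consent flag. The `AISettings` fields and the `recommendations` function are assumptions for this example, and filtering out already-seen items is only a stand-in for real personalization.

```python
from dataclasses import dataclass


@dataclass
class AISettings:
    ai_enabled: bool = True             # master switch the user can turn off
    personalization: bool = False       # opt-in, off by default
    data_sharing_consent: bool = False  # set only after a consent prompt


def recommendations(user_history, catalog, settings):
    """Return items for the user while honoring their AI settings."""
    if not settings.ai_enabled:
        return []  # AI features are switched off entirely
    if settings.personalization and settings.data_sharing_consent:
        seen = set(user_history)
        return [item for item in catalog if item not in seen]
    return list(catalog)  # generic fallback that ignores user history


catalog = ["a", "b", "c"]
history = ["a"]
default_view = recommendations(history, catalog, AISettings())
opted_in = AISettings(personalization=True, data_sharing_consent=True)
personal_view = recommendations(history, catalog, opted_in)
off_view = recommendations(history, catalog, AISettings(ai_enabled=False))
```

Note that the defaults lean toward privacy: personalization uses the user's history only after they have opted in and given consent.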

#7 Predict Evolving Ethical Challenges With AI Advancements

The global AI market is expected to grow at a CAGR of 35.9% through 2030. At that pace, it is hard to predict or plan for the kinds of ethical risks you might face in the future. To ensure that major ethical risks do not take you by surprise, you must invest in ongoing analysis and monitoring of trends and processes.

You must regularly scan your AI-powered processes and workflows for emerging risks, such as algorithmic discrimination, deepfakes, and more. When you are building an AI-powered product, consider hiring an ethicist or partnering with advisory groups who can help you draft the necessary policies. Also, run simulated exercises in new environments so that you can test the resilience of your product and AI workflows.

#8 Adopt an Iterative, Proactive Mindset

Contrary to what a lot of companies believe, ethical AI is not a one-time commitment or checklist. Investing in feedback and continuous learning is essential for industry leaders and companies that want to truly benefit from ethical AI.

Companies must conduct regular audits and monitor the outcomes to ensure that the ethical protocols align with the evolving needs of users. To ensure that this happens, companies must collect feedback regularly and act on the common user issues and pain points. Publishing transparency reports that help you address failures and solutions openly can also help you establish more credibility among users.

The more proactive you are about transparency and fairness, the more you can humanize your product and user experience.

Concluding Remarks

Implementing Ethical AI and related practices in your software development process is not optional if you want to create sustainable and innovative products. Building products that are transparent, human-centered, and accountable can help you earn user trust easily, and comply with evolving regulations at the same time. With organizations rushing to double down on their AI investments in the next few years, prioritizing ethics with your AI implementation will help you have more impact on your users.
